AWS Cloud Concepts

This post was originally published in 2023 for Cloud Foundation and updated in 2024 for the Solutions Architect certification. You will not find insights or in-depth discussion here: it only contains notes gathered from various sources to help organize the concepts for the exams.

AWS offers many certifications, and a few of them together define a role. You can see all the journeys here. As soon as you are prepared, you can schedule your exam here. Two important benefits are 30 extra minutes if you are not a native English speaker and a 50% discount on your next exam.

An optional exam is AWS Certified Cloud Practitioner (CLF-C01), and the first mandatory one is AWS Certified Solutions Architect Associate (SAA-C03).

The first step in your journey is creating an account for your hands-on tests. AWS has a how-to for it. Remember not to use the root user for your tasks: create a user in the AWS IAM service through the AWS Management Console. The default Region available to the user is US East (N. Virginia).

Some courses:

- Udemy Course: AWS Certified Cloud Practitioner Exam Training

- Udemy Course: [NEW] Ultimate AWS Certified Cloud Practitioner - 2023

- AWS Certified Cloud Practitioner Practice Exams CLF-C01 2023

- 6 Practice Exams | AWS Certified Cloud Practitioner CLF-C01

- Ultimate AWS Certified Solutions Architect Associate SAA-C03

- AWS Certified Solutions Architect Associate (SAA-C03) Course

- Practice Exam AWS Certified Solutions Architect Associate

- [Cloud Guru] AWS Certified Solutions Architect - Associate (SAA-C03)

Cloud Concepts

Definition: Cloud computing is the on-demand delivery of IT resources over the Internet with pay-as-you-go pricing.

Cloud vs Traditional:

- Cloud: On-demand, Broad network access, Resource pooling, Rapid elasticity, Measured Service.

- Traditional: Requires human involvement, Internal accessibility, limited public presence, Single-tenant, can be virtualized, Limited scalability, Usage is not typically measured

Problems solved: Flexibility, Cost-Effectiveness, Scalability, Elasticity, Agility, High Availability, and Fault Tolerance

Benefits: Agility, Elasticity, Cost saving (trade fixed expenses for variable expenses), deploy globally in minutes

Advantages of cloud computing

- On-Demand: Pay only when you consume computing resources, and pay only for how much you consume

- Economies of scale: lower pay-as-you-go prices

- Elasticity: Scale up and down as required within a few minutes

- Increase speed and agility: the cost and time it takes to experiment and develop is significantly lower; speed to create resources; experiment quickly; scalable compute capacity

- Stop spending money running and maintaining data centers

- Go global in minutes

- IaaS (Infrastructure as a Service): the customer is not responsible for the underlying hardware and hypervisor, but is responsible for the operating system (OS), data, and applications. Ex: EC2, CloudFormation.

- PaaS (Platform as a Service): the customer is responsible only for the applications and data, and only needs to upload code/data to create the application. Ex: AWS Elastic Beanstalk; Azure WebApps; Google App Engine.

- SaaS (Software as a Service): the customer does not manage anything and only uses the service after signing up for an account (Facebook, Salesforce).

Cloud Computing Deployment Models

- Public Cloud (AWS, Azure, GCP): the resources are owned and operated by the provider, and the services are delivered over the internet

- Hybrid Cloud: keeps some services on premises. It combines control over sensitive assets with the flexibility of the public cloud.

- Private Cloud (on-premises): not exposed; it allows automating some processes, but managing the whole stack is the responsibility of the company. It must include self-service, multi-tenancy, metering, and elasticity. Benefits: complete control, security (keeps the data and applications in house)

- Multicloud - use private/public from multiple providers

Serverless: technologies for running code, managing data, and integrating applications, all without managing servers. Serverless technologies feature automatic scaling, built-in high availability, and a pay-for-use billing model to increase agility and optimize costs. They eliminate infrastructure management tasks such as capacity provisioning, patching, and OS maintenance. Serverless does not mean there are no servers.

AWS Global Infrastructure

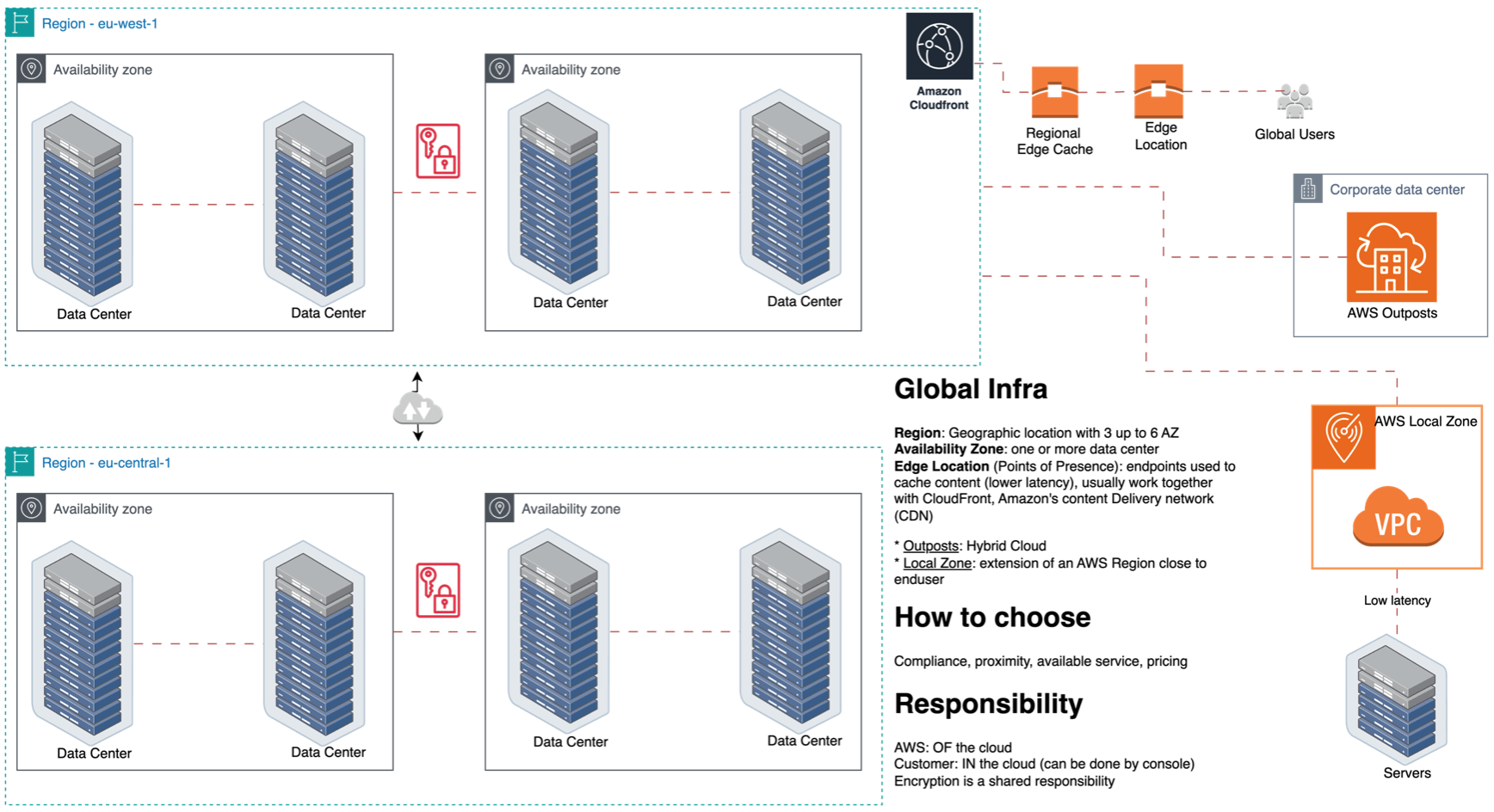

AWS Global Infrastructure: makes global applications possible (decreased latency, disaster recovery, attack protection)

- Availability Zones (AZ): one or more discrete data centers with redundant power, networking, and connectivity. Each AZ has independent power, cooling, and physical security and is connected via redundant, ultra-low-latency networks. AZs give customers the ability to operate production applications and databases that are more highly available, fault tolerant, and scalable than would be possible from a single data center. All traffic between AZs is encrypted. AZs are physically separated by a meaningful distance. Use a minimum of two AZs to achieve high availability.

- AWS Regions: physical locations around the world where AWS clusters data centers. Each AWS Region consists of multiple isolated and physically separate AZs within a geographic area, with a minimum of three AZs per Region. Criteria for choosing a Region: compliance, proximity to the customer, available services (List of AWS Services Available by Region), and pricing.

- Local Zones: place compute, storage, database, and other select AWS services closer to end-users. Each AWS Local Zone location is an extension of an AWS Region.

- Edge Locations: Content Delivery Network (CDN) endpoints for CloudFront. They deliver content closer to the user.

- Regional Edge Caches: between your CloudFront Origin servers and the Edge Locations

- Architecture: Single Region + SingleAZ; Single Region + Multi AZ; Multi Region + Active-Passive; Multi Region + Active-Active

- With Active-Passive failover, it is possible to apply the Failover routing policy

- AWS Outposts: runs in virtually any on-premises or edge location

- It brings the AWS data center close to on-premises (racks)

- Hybrid cloud, fully managed infra, consistency

- Outposts Racks: Complete Rack (42 U rack)

- Outposts servers: 1U or 2U

- Low latency, local data, data residency, easier migration, fully managed service

Additional References:

- [DigitalCloud] Global Infrastructure

- [DigitalCloud] AWS Application Integration Services

- Regions and Availability Zones

AWS Well-Architected Framework

The AWS Well-Architected Framework helps to build secure, high-performing, resilient, and efficient infrastructure [1][2][3][4].

AWS Best Practices

- Scalability (vertical and horizontal)

- Disposable Resources

- Automation (serverless, IaaS, etc.)

- Loose Coupling

- Services, not servers

- Design for failure -> Distributing workloads across multiple Availability Zones

- Provision capacity for peak load

- Stop guessing the capacity needs

- Test systems at production scale

- Automate

- Evolutionary architecture

- Drive architecture using data

- Simulate applications for flash sale days

- Operational Excellence: run and monitor system

- Design Principles: perform operations as code (IaC); annotate documentation; make frequent, small, reversible changes; refine operations procedures; anticipate failure; learn from failures

- Best Practices: create, use, and validate procedures; collect metrics; embrace continuous change

- Security: protect information, systems and assets

- Design Principles: strong identity foundation; traceability; apply at all layers; automate; protect data in transit and at rest; keep people away from data; prepare for security events

- Best Practices: control who can do what; identify incidents; maintain confidentiality and integrity of data

- Reliability: systems recover from infrastructure or service disruptions

- Design Principles: test recovery procedures; automatically recover from failure; scale horizontally; stop guessing capacity; manage change in automation

- Best Practices: Foundations, Change Management, Failure Management

- Foundation Services: Amazon VPC, AWS Service Quotas, AWS Trusted Advisor

- Change management: CloudWatch, CloudTrail, AWS Config

- Performance Efficiency: use compute resources efficiently

- Design Principles: democratize advanced technology; go global in minutes; experiment more often; Mechanical sympathy

- Best Practices: data-driven approach; review the choices; make trade-offs

- Cost Optimization: run systems to deliver value at the lowest price

- Design Principles: adopt a consumption model; measure overall efficiency; stop spending money on data center operations; analyze and attribute expenditure; use managed and application-level services to reduce cost

- Best Practices: using the appropriate services, resources, and configurations for the specific workloads

- Sustainability (shared responsibility): minimizing the environmental impacts of running cloud workloads

- Design Principles: understand impacts; establish sustainability goals; maximize utilization; anticipate and adopt new solutions; use managed services; reduce downstream impact

IAM - AWS Identity and Access Management

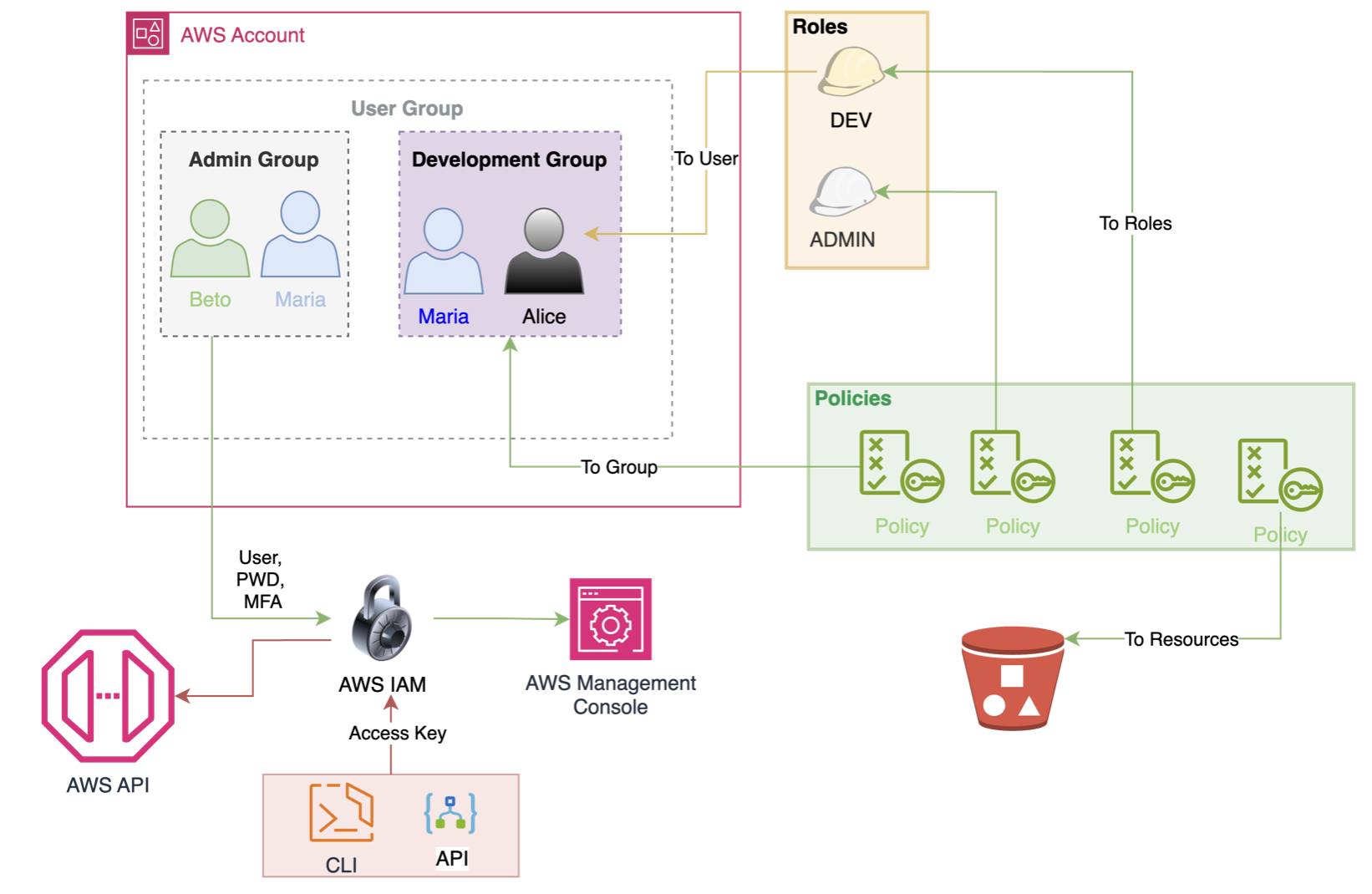

Identify AWS access management (IAM) capabilities. IAM is the AWS service that specifies how people, tools, and applications access AWS services and data [1][2][3].

- IAM is a global service (it does not apply to a specific Region) used to control access to AWS resources (authentication/authorization). It can be used to manage users, groups, access policies, user credentials, user password policies, MFA, and API keys.

- The root user has full administrative permissions and complete access to all AWS services and resources. Actions allowed only to root: change account settings, close the account, restore IAM permissions, change or cancel the AWS Support plan, register as a seller, configure an S3 bucket to enable MFA (MFA Delete), edit/delete S3 bucket policies. SCPs can limit the root user of member accounts.

- When identity federation (AD, Facebook, SAML, OpenID) is configured, an IAM user account is not necessary

- Power User has many permissions, but not the permission to manage groups and users in IAM.

- IAM Security Tool:

- IAM Credential Report (account-level): account's users and their credential status. Access it by IAM menu Credential Report

- IAM Access Advisor (user-level): services permissions and last access. Access it by IAM User menu. Use it to identify unnecessary permissions that have been assigned to users.

A user is an entity (person or service) created with no permissions by default, other than logging in to the AWS console. Permissions must be granted explicitly. Users log in with a user name and password. Depending on their permissions, they can change configurations or delete resources in your AWS account. Users created to represent an application are known as "service accounts". It's possible to have 5000 users per AWS account.

Groups are a way to organize users and apply policies (permissions) to a collection of users at the same time. A user can belong to multiple groups. Only users can be members of groups, and groups cannot be nested (groups within groups). A group is not an identity, so it cannot be referenced as a principal in policies.

Roles delegate permissions. Roles are assumed by users, applications, and services. A role provides temporary security credentials (STS - Security Token Service) for the role session. IAM roles also make it possible to access cross-account resources. The role's trust policy defines the trusted entities that are allowed to assume it.

Policies manage access and can be attached to users, groups, roles, or resources. When a policy is associated with an identity or resource, it defines their permissions. It is a document written in JSON. The policy is evaluated when a user or role makes a request, and the permissions inside it determine whether the request is allowed or denied. Best practice: least privilege. The types of policies are: identity-based policies (users, groups, roles), resource-based policies (resources), permissions boundaries (maximum permissions that an identity-based policy can grant to an IAM entity), AWS Organizations service control policies (SCPs) (maximum permissions for an organization), access control lists (ACLs), and session policies (AssumeRole* API actions). Policy main elements:

- Version

- Effect: allow/deny

- Action: type of action that should be allowed or denied

- Resource: specifies the object or objects that the policy statement covers

- Condition: circumstances under which the policy grants permission

- Principal: account, user, role, or federated user
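To make the policy elements above concrete, here is a minimal sketch in Python that builds an identity-based policy document and evaluates a request against it. The bucket name `example-bucket` and the helper names are hypothetical, and the evaluator is a deliberate simplification (it ignores `Condition`, `Principal`, and all other policy types): it only illustrates that an explicit Deny wins over an Allow and that anything not allowed is implicitly denied.

```python
import json
from fnmatch import fnmatchcase


def make_statement(effect, actions, resources, condition=None):
    """Build one statement from the Effect, Action, Resource, Condition elements."""
    statement = {"Effect": effect, "Action": actions, "Resource": resources}
    if condition is not None:
        statement["Condition"] = condition
    return statement


# Hypothetical policy: read objects from one bucket, never delete them.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        make_statement("Allow", ["s3:GetObject"], ["arn:aws:s3:::example-bucket/*"]),
        make_statement("Deny", ["s3:DeleteObject"], ["arn:aws:s3:::example-bucket/*"]),
    ],
}


def _matches(patterns, value):
    # Simplified wildcard matching; real IAM matching has more rules.
    return any(fnmatchcase(value, pattern) for pattern in patterns)


def is_allowed(policy, action, resource):
    """Simplified evaluation: explicit Deny > Allow > implicit deny."""
    allowed = False
    for statement in policy["Statement"]:
        if _matches(statement["Action"], action) and _matches(statement["Resource"], resource):
            if statement["Effect"] == "Deny":
                return False  # an explicit Deny always wins
            allowed = True
    return allowed  # stays False when no statement matched (implicit deny)


print(json.dumps(policy, indent=2))
```

For example, `is_allowed(policy, "s3:GetObject", "arn:aws:s3:::example-bucket/report.csv")` is allowed, while a `s3:PutObject` request matches no statement and falls back to the implicit deny.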

IAM database authentication is another way to authenticate a user's credentials when accessing a database.

Access keys are used for programmatic access (API/SDK/CLI). They are generated through the AWS Console.

SSH keys are an IAM feature that lets developers authenticate over SSH to AWS CodeCommit repositories; access to AWS services through the AWS CLI uses access keys.

AWS IAM Identity Center (successor to AWS Single Sign-On) requires a two-way trust so that it has permissions to read user and group information from your domain to synchronize user and group metadata. IAM Identity Center uses this metadata when assigning access to permission sets or applications.

- Use IAM user instead of root user in regular activities

- Add user into groups

- Strong password

- Use MFA

- Create roles for permissions to AWS services

- Use access keys for programmatic access (CLI/SDK)

- Audit permissions through IAM Credential Reports and IAM Access Advisor

- Protect your access keys

- Prefer customer managed policies (AWS managed policies cannot be edited)

- Use roles for applications that run on EC2 and to delegate permissions

- Rotate credentials

- Grant only the permissions that are really needed (least privilege)

Good Reads:

- IAM roles for Amazon EC2

- AWS Identity and Access Management

- Using IAM authentication to connect with pgAdmin Amazon Aurora PostgreSQL or Amazon RDS for PostgreSQL

- Policies and permissions in AWS Identity and Access Management

- How can I use IAM roles to restrict API calls from specific IP addresses to the AWS Management Console?

- Set an account password policy for IAM users

- Amazon ECS task IAM role

- Manage IAM Roles

- Policy evaluation logic

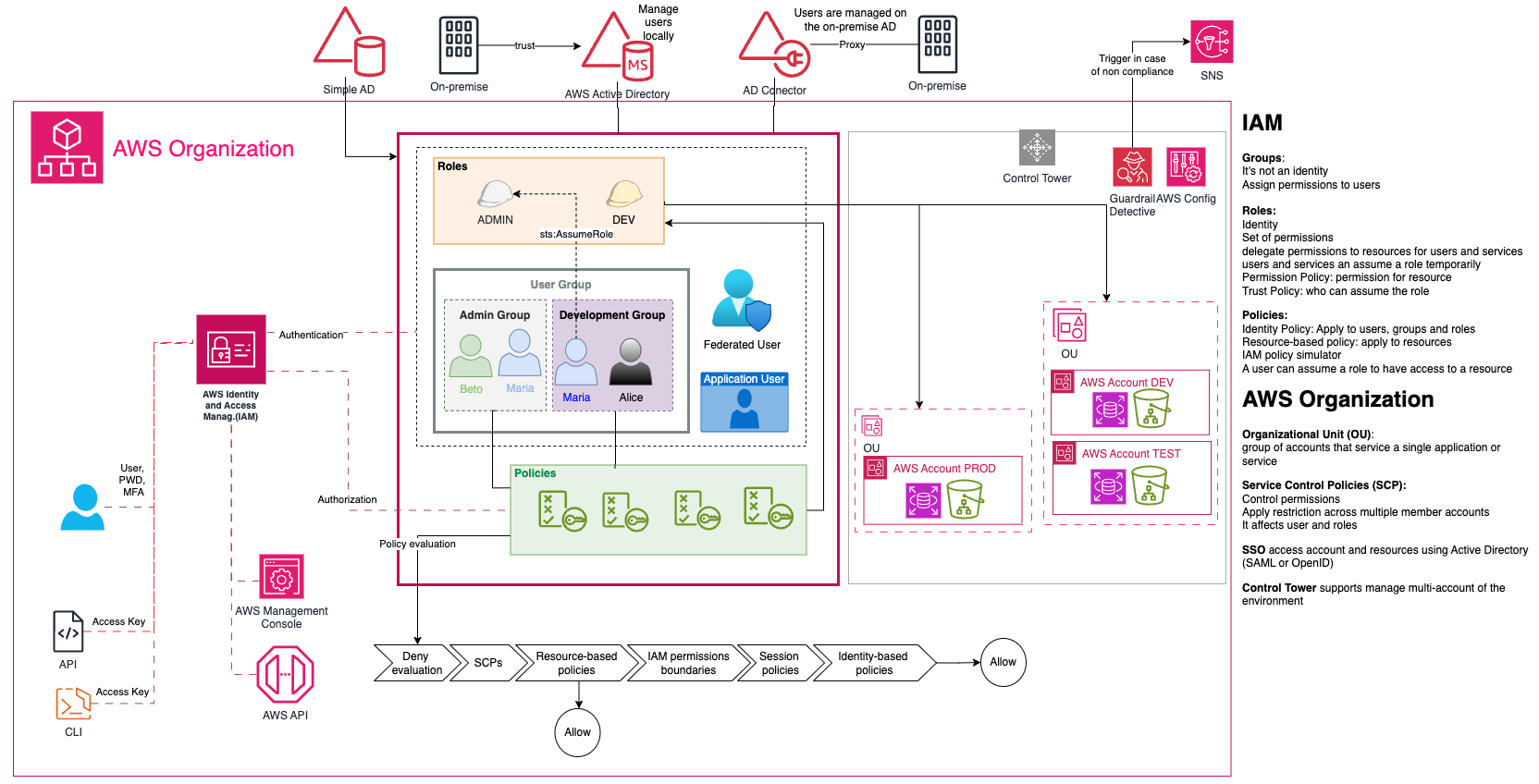

AWS Organization and Control Tower

- AWS Organizations is a collection of AWS accounts in which you can manage the accounts, apply policies, delegate responsibilities, apply SSO, share resources within the organization, and use CloudTrail across the accounts.

- AWS RAM (Resource Access Manager) makes it easy to share resources across AWS accounts. It is free to use; you pay only for the shared resources. Participants cannot modify shared resources.

- OU: an organizational unit is a logical group of accounts

- For cross-account access, it is better to create a role than to create a new IAM user. A role gives temporary credentials.

- It provides volume discounts for EC2 and S3, aggregated across the member AWS accounts.

- Consolidated billing: one bill for multiple accounts; easy tracking of charges across accounts; usage in all accounts is combined, so volume pricing discounts, Reserved Instance discounts, and Savings Plans are shared.

- Service Control Policies (SCPs) are part of AWS Organizations and can restrict the permissions available in an AWS account, but they do NOT grant permissions. They can be used to apply restrictions across multiple member accounts (deny rules). They affect only IAM users and roles (not resource policies).

- You can make new accounts using AWS Organizations however the easiest way to do this is by using the AWS Control Tower service.

- Control Tower sits on top of Organizations and supports some additional features, such as creating a Landing Zone (multi-account baseline) that Control Tower then deploys.

- It sets up and governs a secure and compliant multi-account AWS environment.

- Monitor compliance through a dashboard. It supports preventive guardrails using SCPs (e.g., restrict Regions across accounts) and detective guardrails using AWS Config (e.g., identify untagged resources).

- Features:

- Landing Zone: well-architected, multi-account environment based on compliance and security best practices.

- Guardrails: high-level rules providing continuous governance -> preventive (ensures accounts maintain governance by disallowing violating actions; leverages service control policies; status of enforced or not enabled; supported in all Regions) and detective (detects and alerts within all accounts; leverages AWS Config rules; status of clear, in violation, or not enabled; applies to some Regions)

- Account Factory: configurable account template

- CloudFormation StackSet: automated deployment of templates

- Shared accounts: three accounts used by Control Tower created during landing zone creation
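The point that SCPs limit but never grant permissions can be sketched with plain set arithmetic. This is only a mental model under simplifying assumptions (exact action names, no wildcards, no resource policies), and the function and action lists are illustrative rather than an AWS API: an action is effective only when an identity policy allows it and the SCP also allows it, and an explicit deny removes it.

```python
def effective_permissions(identity_allows, scp_allows, explicit_denies=frozenset()):
    """Actions usable by an IAM user or role in a member account.

    identity_allows: actions granted by identity-based policies
    scp_allows:      actions the SCPs leave available (the maximum boundary)
    explicit_denies: actions explicitly denied by any policy
    """
    return (set(identity_allows) & set(scp_allows)) - set(explicit_denies)


# Hypothetical example values.
identity = {"ec2:RunInstances", "s3:GetObject", "iam:CreateUser"}
scp = {"ec2:RunInstances", "s3:GetObject", "rds:CreateDBInstance"}

print(sorted(effective_permissions(identity, scp)))
```

Here `iam:CreateUser` is dropped because the SCP does not allow it, and `rds:CreateDBInstance` is not gained because the SCP alone grants nothing.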

EC2 - Elastic Compute Cloud

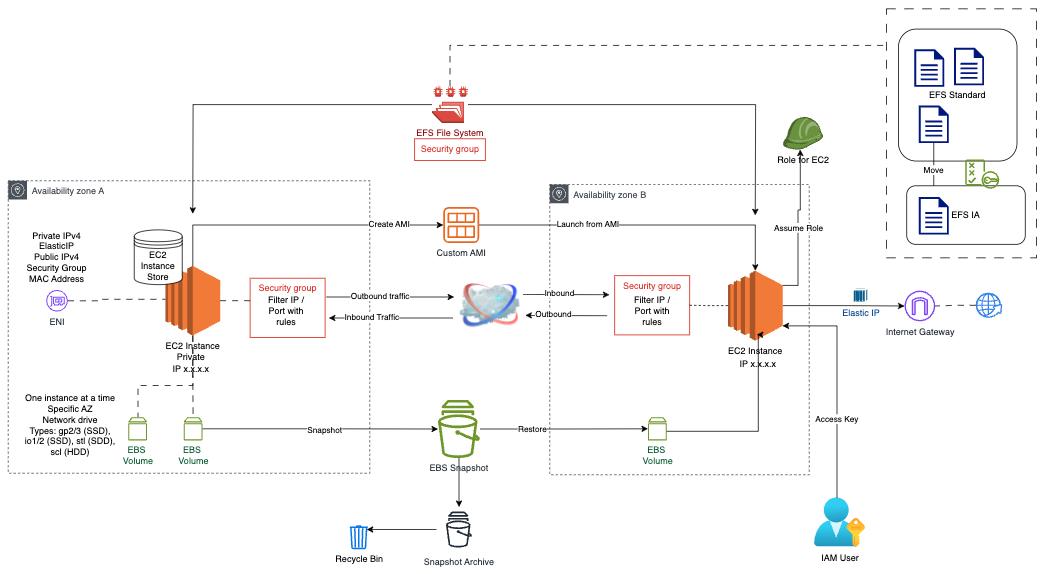

Amazon EC2 (Elastic Compute Cloud)[1][2] is a virtual machine that is managed by AWS.

- IaaS (Infrastructure as a service)

- A new instance combines CPU, memory, storage, and networking. The different types were created to optimize for different use cases. The `t2.micro` is an example of an instance type: the first letter 't' represents the instance class, the number '2' represents the generation, and the last part is the size. Each category tries to balance different characteristics:

- compute: require high performance (batch, media transcoding, HPC, machine learning, gaming) - Ex. C8g

- memory: process large data sets in memory (relational/non-relational database, distributed cache, in-memory database for BI, real-time unstructured data) - Ex. R8g

- storage: high, sequential read and write access to large data sets on local storage (OLTP, relational/non-relational databases, cache, data warehouses, distributed file systems) - Ex. I4g

- networking

- Secure, resizable compute capacity in the cloud. Designed to make web-scale cloud computing easier for developers

- It can run virtual server instances in the cloud

- Each instance can run Windows/Linux/MacOS

- It works with services for storing data (EBS/EFS), distributing load (ELB), and scaling (ASG)

- Volumes: EBS (persistent) and Instance Store (non-persistent)

- Bootstrap scripts: scripts that run when the instance first starts (EC2 User data scripts). They can install updates, software, etc. Those scripts run as the root user.

- Instance metadata is information about the instance. User data and metadata are not encrypted. The metadata is available at http://169.254.169.254/latest/meta-data. To review scripts used to bootstrap the instances at runtime you can access http://169.254.169.254/latest/user-data

- When the instance is stopped and started again, the public IP changes. The private IP does not change.

- If you have a legacy application, a right-sized EC2 instance (the right amount of resources for the application) is a good way to migrate it to the cloud

- Key pair to access EC2: public key (stored in AWS) + private key file (stored locally). It is used to connect to the EC2 instance.

- Get metrics in CloudWatch, logs in CloudTrail

- Shared Responsibility

- AWS: Infrastructure (global network security), isolation on physical hosts, replacing hardware, compliance validation

- Customer: Security Groups rules, OS patches and updates, Software and utilities installed on the EC2 instance, IAM Roles assigned to EC2 and IAM user access management, Data security on your instance.
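The instance type naming convention described above (class letter, generation number, size, as in `t2.micro`) can be sketched as a small parser. This is an illustrative helper, not an AWS API; the regular expression is an assumption that covers common names such as `t2.micro` or `c8g.large`, where letters after the generation (like the `g` in `c8g`) are treated as extra attributes.

```python
import re
from typing import NamedTuple


class InstanceType(NamedTuple):
    instance_class: str  # e.g. "t", "c", "r"
    generation: int      # e.g. 2, 8
    attributes: str      # optional suffix after the generation, e.g. "g"
    size: str            # e.g. "micro", "large"


# Assumed pattern: <class letters><generation digits><optional attributes>.<size>
_PATTERN = re.compile(r"^([a-z]+)(\d+)([a-z0-9-]*)\.([a-z0-9-]+)$")


def parse_instance_type(name: str) -> InstanceType:
    """Split an instance type name into class, generation, attributes, and size."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name!r}")
    instance_class, generation, attributes, size = match.groups()
    return InstanceType(instance_class, int(generation), attributes, size)


print(parse_instance_type("t2.micro"))
print(parse_instance_type("c8g.large"))
```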

VMware on AWS: for hybrid cloud, cloud migration, disaster recovery, and leveraging AWS services.

Amazon EC2 Spot Instances

- Let you take advantage of unused EC2 capacity in the AWS cloud. Spot Instances are available at up to a 90% discount compared to On-Demand prices. You can use Spot Instances for various stateless, fault-tolerant, or flexible applications such as big data, containerized workloads, CI/CD, web servers, high-performance computing (HPC), and test & development workloads.

- Useful for workloads resilient to failure (batch, data analysis, Image processing, distributed workload, CI/CD and testing). However, it is not suitable for critical jobs or persistent workload and databases.

- Useful when workload is not immediate and can be stopped for a moment and continue from that point after

- Spot Fleet: a collection of Spot Instances and, optionally, On-Demand Instances. It tries to match the target capacity within your price constraints. Strategies: capacityOptimized, lowestPrice, diversified, InstancePoolsToUseCount

- EC2 Image Builder: creates virtual machine or container images

- Automates the creation, maintenance, validation, and testing of EC2 AMIs

- The execution can be scheduled and after the process the AMI can be distributed (multiple regions)

Amazon AMI (Amazon Machine Image)

- Template of root volume + launch permissions + block device mapping the volumes to attach

- Launch EC2 - one or more pre-configured instance

- It can be customized

- It is built for a specific Region. The AMI must be in the same Region as the EC2 instance to be launched, but it can be copied to another Region where you want to create an instance.

- It can be copied to other Regions through the console, the command line, or the API

- An EBS snapshot is created when an AMI is built

- Category:

- Amazon EBS: created from an Amazon EBS snapshot. It can be stopped. The data is not lost if stop or reboot. By default, the root device volume will be deleted on termination

- Instance Store: created from a template stored in S3. If you delete the instance, the volume is deleted as well. If the instance fails, you lose the data; if it reboots, the data is not lost. The instance cannot be stopped.

EC2 Hibernate: suspend to disk. Hibernation saves the contents of RAM to the EBS root volume. When the instance starts again, the EBS root volume is restored and the RAM contents are reloaded. It is faster to boot up. Maximum time an instance can be in hibernation: 60 days.

Storage:

EC2 Instance Store is an alternative to EBS backed by a high-performance hardware disk, with better I/O performance. However, instances lose this storage when they stop (but not when they reboot). So the best scenarios for it are, e.g., buffers, caches, and temporary content.

- AWS: infrastructure, replication of data for EBS volumes and EFS drives, replacing faulty hardware, ensuring their employees cannot access your data.

- Customer: backup and snapshot procedures, data encryption, risk analysis

EBS - Amazon Elastic Block Store [1][2][3][4]

- EBS Volume: attached to one instance.

- Designed for mission-critical workload

- High Availability: Automatically replicated within a single AZ

- Scalable: dynamically increase capacity and change the volume type with no impact

- EBS volumes do not need to be attached to an instance. There is the root volume, and it is good practice to create your own volume.

- It allows the instance to persist data even after termination; however, root EBS volumes are deleted on termination by default

- EBS volumes cannot be accessed simultaneously by multiple EC2 instances, except under constraints: Amazon EBS Multi-Attach attaches a volume to multiple instances (same AZ, only SSD volumes, allowed only in some Regions, and other restrictions)

- It can be mounted to one instance at a time and can be quickly detached from one EC2 instance and attached to another. However, it is locked to an AZ. To move it to another AZ, you must create a snapshot, which can be copied across AZs or Regions.

- A snapshot is a backup of the EBS Volume at a point in time. The snapshots are stored on Amazon S3 and they are incremental. EBS Snapshot features are EBS Snapshot Archive and Recycle Bin for EBS Snapshot. The process with snapshots (creating, deletion, updates) can be automated with DLM (Data Lifecycle Manager).

- It has a limited performance.

- Pricing: Volumes type (performance); storage volume in GB per month provisioned; Snapshots (data storage per month); Data Transfer (OUT)

- EBS Volume Types:

- gp2/gp3 (SSD): general purpose; balance between price and performance; 3K IOPS and 125 MB/s (up to 16K IOPS and 1K MiB/s). Use cases: high performance at a low cost (MySQL, virtual desktops, boot volumes, dev and test environments).

- io1 (SSD): high performance and most expensive. 64K IOPS per volume, 50 IOPS per GiB. Critical low latency or high throughput. Use cases: large databases, I/O-intensive database workloads

- io2 (SSD): Higher durability. 500 IOPS per GiB (same price of io1). I/O intensive apps, large database

- st1(HDD): low cost, use case: big data, data warehouse, log processing;

- sc1 (HDD): lowest cost; Throughput-oriented storage for data that is infrequently accessed.

- RAID: RAID array uses multiple EBS volumes to improve performance or redundancy

- RAID 0: I/O performance

- RAID 1: Fault tolerance. Creates a mirror of the data

- Encryption: uses KMS; if the volume is created encrypted, the data in transit is encrypted, the snapshots are encrypted, and the volumes created from those snapshots are encrypted. A copy of an unencrypted volume can be encrypted, but encryption cannot be enabled on a volume after it is launched.
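The provisioned IOPS figures listed for io1 above (50 IOPS per GiB, 64K IOPS per volume) combine into a simple rule of thumb: the IOPS you can provision is the size-based ratio, capped by the per-volume maximum. The sketch below uses only the io1 numbers from these notes; the function name is hypothetical, and for other volume types the ratio and cap should be taken from the current AWS documentation rather than from this example.

```python
# Values taken from the io1 notes above.
IO1_IOPS_PER_GIB = 50
IO1_VOLUME_CAP = 64_000


def max_provisioned_iops(size_gib: int, iops_per_gib: int, volume_cap: int) -> int:
    """Largest IOPS value that can be provisioned for a volume of the given size."""
    return min(size_gib * iops_per_gib, volume_cap)


# A 100 GiB io1 volume is limited by its size: 100 * 50 = 5,000 IOPS.
print(max_provisioned_iops(100, IO1_IOPS_PER_GIB, IO1_VOLUME_CAP))

# A 2,000 GiB io1 volume hits the per-volume cap instead.
print(max_provisioned_iops(2_000, IO1_IOPS_PER_GIB, IO1_VOLUME_CAP))
```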

EFS - Amazon Elastic File System [1][2][3]

- Network File System (NFS) for Linux instances and linux-based applications in multi-AZ.

- Shared File storage service using EC2.

- It is considered highly available, scalable, expensive (pay per use).

- Easy to set up, scale, and cost-optimize file storage in the Amazon Cloud

- Tiers: frequent access (Standard) and not frequent access (IA)

- EFS Infrequent Access (EFS-IA) is a storage class that is cost-optimized for files that are not accessed frequently, and it has a lower cost than EFS Standard. The transition is based on the last access. You can use a lifecycle policy to move a file from EFS Standard to EFS-IA (e.g., AFTER_7_DAYS).

- Encryption at rest using KMS

- Replication: EFS can be used with AWS Backup for automated and centralized backup across AWS services, and supports replication to another region. All replication traffic stays on the AWS global backbone, and most changes are replicated within a minute, with an overall Recovery Point Objective (RPO) of 15 minutes for most file systems

- Amazon FSx

- for Windows File Server: fully managed Microsoft Windows file servers, manage native Microsoft windows file system. Make the migration easy

- for Lustre: managed file system that is optimized for compute-intensive workloads (HPC, machine learning, media data processing). For Linux file systems. It can store data in S3.
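The EFS lifecycle policy mentioned above (e.g., AFTER_7_DAYS) decides the storage class from the time of last access. The following sketch only models that decision in plain Python; it is not how EFS is configured (the policy is set on the file system itself), and the function name and the exact boundary behavior are assumptions made for illustration.

```python
from datetime import datetime, timedelta


def efs_storage_class(last_access: datetime, now: datetime, transition_days: int = 7) -> str:
    """Return "IA" when the file has not been accessed for transition_days, else "Standard"."""
    if now - last_access >= timedelta(days=transition_days):
        return "IA"
    return "Standard"


now = datetime(2024, 1, 31)
print(efs_storage_class(datetime(2024, 1, 30), now))  # accessed yesterday
print(efs_storage_class(datetime(2024, 1, 1), now))   # not accessed for 30 days
```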

Network:

- ENI (Elastic Network Interface): Virtual Network card

- Attributes: Primary and secondary IPv4 address, Elastic IPv4, public IPv4/IPv6, security group, MAC address, source/destination check flag, description

- Attached to instances

- eth0 is the ENI created when an EC2 instance is launched

- Use cases: create a management network; use network and security compliances in your VPC; create dual-homed instances with workloads/roles on distinct subnets; create a low-budget, high-availability solution

- EN (Enhanced Networking): uses single root I/O virtualization (SR-IOV) to provide high performance (1 Gbps to 100 Gbps)

- ENA (Elastic Network Adapter): it enables Enhanced Networking, which provides higher bandwidth, higher packet-per-second (PPS) performance, and consistently lower inter-instance latencies (up to 100 Gbps)

- VF (Intel 82599 Virtual Function Interface): For older instances (up to 10 Gbps)

- EFA (Elastic Fabric Adapter): ENA with more capabilities. It is a network interface for Amazon EC2 instances that enables customers to run applications requiring high levels of inter-node communications at scale on AWS.

Security

Security Group (SG):

- Virtual firewall to ENI/EC2 instance

- Instance level (can be attached to multiple instances)

- Applied to the network security, controlling the traffic into or out of the EC2 instance

- By default, inbound traffic is blocked and outbound traffic is authorised

- ALLOW rules: a security group contains only allow rules, and these rules can reference IP ranges or other security groups.

- They regulate access to ports and authorised IP ranges.

- Stateful: if an inbound rule allows the traffic, the outbound response is automatically allowed without an explicit rule; if the instance makes an outgoing request and a rule allows the outbound traffic, the inbound response is automatically allowed. Supports only allow rules. Security groups can be associated with a NAT instance. All rules are evaluated before deciding whether to allow traffic.

- Locked down to a Region/VPC combination.

- Protects against low-level network attacks such as UDP floods.

- A good practice is to create a separate security group for SSH access.

- Tips: a time-out error usually points to a security group issue; a connection refused error can be an application error.

- Security groups can be changed for an instance when the instance is in the running or stopped state.
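The security group behavior described above (allow rules only, inbound blocked by default, stateful return traffic) can be modeled with a short Python sketch. The classes below are a toy model written for these notes, not an AWS API, and the IP addresses come from documentation ranges chosen as assumptions.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    protocol: str  # e.g. "tcp"
    port: int      # e.g. 22
    cidr: str      # authorised source range, e.g. "203.0.113.0/24"


class SecurityGroup:
    """Toy model: only allow rules, implicit deny, stateful responses."""

    def __init__(self, inbound_rules):
        self.inbound_rules = list(inbound_rules)
        self._tracked = set()  # connections that were allowed in

    def allow_inbound(self, protocol, port, source_ip):
        source = ip_address(source_ip)
        for rule in self.inbound_rules:
            if (rule.protocol == protocol and rule.port == port
                    and source in ip_network(rule.cidr)):
                self._tracked.add((protocol, port, source_ip))
                return True
        return False  # no matching allow rule: traffic is blocked

    def allow_response(self, protocol, port, dest_ip):
        # Stateful: the reply to an allowed connection needs no outbound rule.
        return (protocol, port, dest_ip) in self._tracked


sg = SecurityGroup([Rule("tcp", 22, "203.0.113.0/24"), Rule("tcp", 443, "0.0.0.0/0")])
print(sg.allow_inbound("tcp", 22, "203.0.113.10"))   # inside the SSH range
print(sg.allow_inbound("tcp", 22, "198.51.100.7"))   # outside the SSH range
```

Keeping SSH in its own rule, as in the example, mirrors the practice of creating a separate security group for SSH access.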

Performance

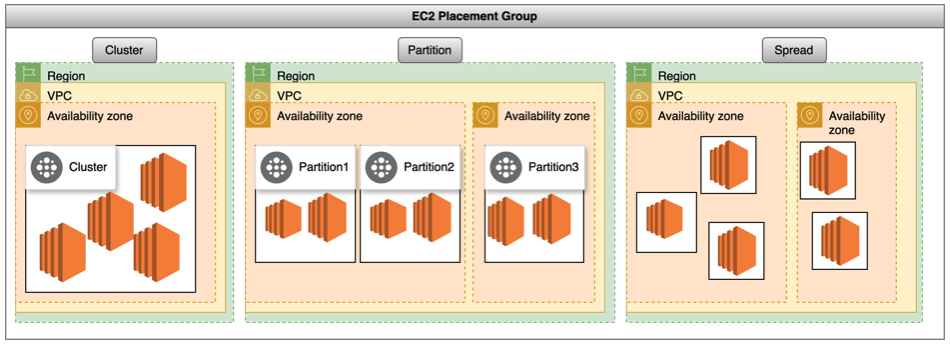

Placement groups: an optimization strategy to meet the needs of your workload, in which you launch a group of interdependent EC2 instances into a placement group to influence their placement. It can be:

- Cluster: grouping of instances within a single AZ. Low latency and high throughput. It can't span multiple AZs

- Partition: instances are spread across partitions, sets of racks in which each rack has its own network and power source. Suited to multiple EC2 instances

- Spread: group of instances that are each placed on distinct underlying hardware. Small number of critical instances that should be separate from each other. A spread placement group can span multiple Availability Zones in the same Region. You can have a maximum of seven running instances per Availability Zone per group.

EC2 Pricing: the price depends on the instances (number, type), load balancing, IP addresses, etc. You can use the AWS Pricing Calculator to simulate the cost.

- On-Demand: short workloads, predictable pricing, billing per second/hour, pay for what you use, highest cost, no discount. Best for short-term and uninterrupted workloads.

- On-Demand Capacity Reservations enable to reserve compute capacity for EC2 instances in a specific AZ for any duration. You always have access to EC2 capacity when you need it, for as long as you need it. You can create Capacity Reservations at any time, without entering a one-year or three-year term commitment, and the capacity is available immediately.

- Reservations (1-3 years): predictable workloads. Available for various services such as EC2, DynamoDB, ElastiCache, RDS, and Redshift. Pay upfront. The remaining term of Reserved Instances can be sold on the Marketplace

- Reserved Instances (RI): long workloads; give a big discount and have a Regional or Zonal scope. Indicated for steady-state usage applications. They cannot be interrupted (up to 72% off the On-Demand price)

- Convertible Reserved Instances: long workloads with flexible instances; give a big discount. This model lets you change the attributes of the RI as long as the exchange results in the creation of RIs of equal or greater value (up to 54% off the On-Demand price)

- EC2 Savings Plan: reduces compute cost based on a long-term commitment (1-3y). Locked to a specific instance family and Region. Lot of flexibility (EC2, Fargate, Lambda). No Upfront, Partial Upfront, or All Upfront payments

- Spot Instance: high discount (up to 90%). These are the most cost-efficient instances in AWS. Urgent capacity; flexible; cost sensitive. Use for apps with flexible start and end times and apps that are only feasible at low compute prices (image rendering, genomic sequencing). Do not use if you need a guarantee of running time.

- Dedicated Host (single customer, your VPC): physical server with EC2 instance capacity dedicated to you; you can use your own licenses. It can be purchased On-Demand or Reserved. It is the most expensive option.

- Dedicated Instance: single customer, isolated hardware dedicated to your application, but this hardware can be shared with other instances in the same account. Compliance, Licensing, on-Demand, Reserved.

- Minimum charge: one-minute for Linux based EC2 instances.
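To compare the pricing models above, the quoted maximum discounts (72% for Reserved Instances, 54% for Convertible Reserved Instances, 90% for Spot) can be applied to an On-Demand rate. The sketch below is plain arithmetic: the hourly rate of 0.10 and the 730 hours per month are hypothetical inputs, and because the percentages are "up to" values, the results are best-case figures rather than real quotes.

```python
# Maximum discounts quoted in the notes above ("up to" values).
MAX_DISCOUNT_PCT = {
    "on_demand": 0,
    "reserved": 72,
    "convertible_reserved": 54,
    "spot": 90,
}


def monthly_cost(on_demand_hourly: float, model: str, hours: int = 730) -> float:
    """Best-case monthly cost of one instance under the given pricing model."""
    discount = MAX_DISCOUNT_PCT[model] / 100
    return on_demand_hourly * (1 - discount) * hours


# Hypothetical On-Demand rate of 0.10 per hour.
for model in MAX_DISCOUNT_PCT:
    print(f"{model}: {monthly_cost(0.10, model):.2f}")
```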

High Availability and Scaling

These are features to be used to ensure elasticity and high availability. They can be used together.

Scalability

- Handle greater loads by adapting

- Scale Up: scale by adding more power (CPU/RAM) to an existing machine/node. The operation still runs on only one computer.

- Scale Out: scale by adding more instances to an existing pool of resources. Fault tolerance is achieved by scaling out.

- Scale In: decrease the number of instances.

- Vertical: increase the size of the instance. Common for non-distributed systems. Limited, e.g., by hardware.

- Horizontal [1]: increase the number of instances. Distributed systems. Common for web applications. Auto Scaling Group and Load Balancer [2]. Instances that are launched by your Auto Scaling group are automatically registered with the load balancer [3].

- High Availability: direct relationship with horizontal scalability. No interruption even with failover. Run across multiple AZs, at least 2

Set Up

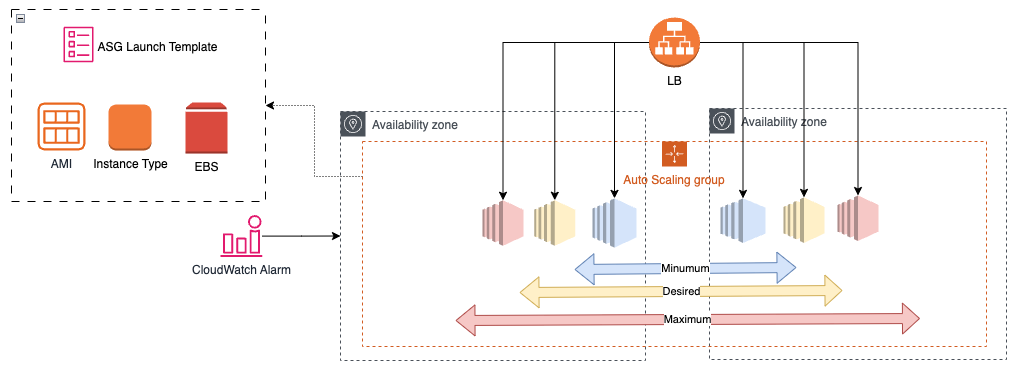

- Launch Template: all the settings needed to build an EC2 instance; for all EC2 auto scaling features; supports versioning; more granularity; recommended. The specification of a network interface has considerations and limitations that need to be taken into account in order to avoid errors. [1]

- Launch Configuration: supports only certain EC2 Auto Scaling features; immutable; limited configuration options; specific use cases

Auto Scaling: creates and removes instances when necessary. It can use a launch configuration (an instance configuration template) that an Auto Scaling Group uses to launch Amazon EC2 instances. [1][2][3]

- ASG contains a collection of EC2 instance (logical group)

- Replace unhealthy instances.

- Only run at an optimal capacity.

- AWS EC2 Auto Scaling provides elasticity and scalability.

- High availability can be achieved with Auto Scaling balancing your EC2 count across the AZs

- Scaling Policies: minimum, maximum and desired capacity

- Step scaling policy: launch resources in response to demand. It's not a guarantee the resources are ready when necessary

- Simple Scaling Policy: Relies on metrics for scaling needs, e.g., add 1 instance when CPU utilization metric > 70%.

- Target Tracking Policy: uses a scaling metric and a target value that the ASG should maintain at all times (it increases or decreases capacity based on a target value for a specific metric), e.g., maintain ASGAverageCPUUtilization at 50%

- Instance Warm-Up: gives new instances time to start before they are placed behind the load balancer, so they do not fail the health check and get terminated prematurely

- Cooldown: pauses Auto Scaling for a set amount of time so it does not launch or terminate more instances;

- Avoid Thrashing: avoid launching and terminating instances in rapid succession (scale out quickly, scale in slowly)

- Scaling types:

- Reactive scaling: Monitors and automatically adjusts the capacity; predictable performance at the lowest possible cost. It, e.g, add/remove (Scale out/in) EC2 instances when the load is increased/decreased.

- Scheduled Scaling (predictable workflow) can be configured for known increase in app traffic.

- Predictive Scaling: uses daily and weekly trends to determine when scale

- Strategy: Manual or Dynamic (1. Simple/Step Scaling (CloudWatch); 2. Target Tracking Scaling; 3. Scheduled Scaling)
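To make the target tracking and cooldown ideas above concrete, here is a simplified sketch. It assumes the metric scales proportionally with the number of instances, which is only an approximation of what EC2 Auto Scaling does internally; the function names and the 300-second cooldown default are illustrative assumptions.

```python
import math


def target_tracking_desired(current_capacity: int, metric_value: float,
                            target_value: float, min_size: int, max_size: int) -> int:
    """Capacity that would bring the average metric back to the target,
    clamped to the group's minimum and maximum size."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    raw = math.ceil(current_capacity * metric_value / target_value)
    return max(min_size, min(max_size, raw))


def in_cooldown(now: float, last_scaling_activity: float,
                cooldown_seconds: float = 300) -> bool:
    """True while the group should not launch or terminate more instances."""
    return now - last_scaling_activity < cooldown_seconds


# 4 instances at 75% average CPU with a 50% target: scale out to 6.
desired = target_tracking_desired(4, 75, 50, min_size=1, max_size=10)
```

The clamp is what the minimum, maximum and desired capacity settings express: the policy proposes a number, and the group limits bound it.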

Scaling Relational Database

- Vertical Scaling: resize the database

- Scaling Storage: storage can be resized up, but it cannot be scaled back down (RDS, Aurora)

- Read Replicas: read-only copies to spread out the workload. Use multiple zones.

- Aurora Serverless: offload scaling to AWS. Unpredictable workloads. Aurora is the only engine that offers a serverless scaling option.

Scaling Non-Relational Database

- AWS manages the scaling for you (DynamoDB)

- Types:

- Provisioned: predictable workload; you need to review past usage to set upper and lower scaling bounds; most cost-effective model

- On-Demand: sporadic workload; less cost-effective;

- Concepts:

- Read Capacity Unit (RCU): DynamoDB unit of measure for reads per second for an item up to 4KB in size. For example, if your items are 7KB in size, 2 RCUs are needed for 1 strongly consistent read per second (1 RCU = one strongly consistent read per second of up to 4KB, so 7KB rounds up to 8KB = 2 RCUs)

- Write Capacity Unit (WCU): DynamoDB unit of measure for writes per second for an item up to 1KB in size. For example, if your item is 3KB in size, 3 WCUs are needed for 1 write per second (1 WCU = one write per second of up to 1KB, so 3KB = 3 WCUs)
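The two worked examples above can be reproduced with a small calculator. This is a sketch with hypothetical function names; the half-cost rule for eventually consistent reads is not stated in the notes above and is included as an assumption based on how DynamoDB documents read consistency.

```python
import math


def read_capacity_units(item_size_kb: float, reads_per_second: int,
                        strongly_consistent: bool = True) -> int:
    """RCUs needed: each strongly consistent read consumes 1 RCU per 4KB (rounded up)."""
    units_per_read = math.ceil(item_size_kb / 4)
    total = units_per_read * reads_per_second
    if not strongly_consistent:
        # Assumption: an eventually consistent read costs half as much.
        total = total / 2
    return math.ceil(total)


def write_capacity_units(item_size_kb: float, writes_per_second: int) -> int:
    """WCUs needed: each write consumes 1 WCU per 1KB (rounded up)."""
    return math.ceil(item_size_kb) * writes_per_second


rcu_example = read_capacity_units(7, 1)   # the 7KB example from the notes
wcu_example = write_capacity_units(3, 1)  # the 3KB example from the notes
```

Item sizes are rounded up per item, not summed first, so ten 7KB reads per second need 20 RCUs rather than 18.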

Disaster Recovery

- RPO - Recovery Point Objective: the point in time you can recover to (24h, 5 minutes, etc.), i.e. how much data you can afford to lose. It is determined by how often you run backups

- RTO - Recovery Time Objective: how fast you must recover, i.e. how long the business can tolerate being down after a disaster

- Strategies

- Backup and Restore: restore from a snapshot (cheapest but slowest). RPO/RTO: hours. Active/Passive.

- Pilot Light: replicate part of your IT structure for a limited set of core services. At recovery time, you can rapidly provision a full-scale production environment around the critical core (faster than backup and restore, but with some downtime). RPO/RTO: tens of minutes. Active/Passive.

- Warm Standby: provision the services necessary to keep the applications up. A scaled-down version of a fully functional environment is always running in the cloud. The application can handle traffic (at reduced capacity levels) immediately, which reduces the RTO (quicker recovery than Pilot Light but more expensive). RPO/RTO: minutes. Active/Passive.

- Multi Site / Hot Site Approach: low RTO (expensive); full production scale is running on AWS and on-premises. RPO/RTO: real time. Active/Active.

- All AWS Multi Region - the best approach for data replication is to use Aurora Global Database

- Active/Active Failover: requires completely duplicated services (the most expensive, but no downtime and the lowest RTO and RPO)

- AWS Elastic Disaster Recovery(DRS): recover physical, virtual and cloud-based servers into AWS

AWS Backup:

- Supports PITR for supported services

- It can be done on-demand or schedule

- Cross-Region/Cross-Account backups

- Tag-based backup policies

- Backup Plans - frequency, retention

- Backup Vault Lock: enforce WORM (WriteOnceReadMany) for backups

AWS networking services

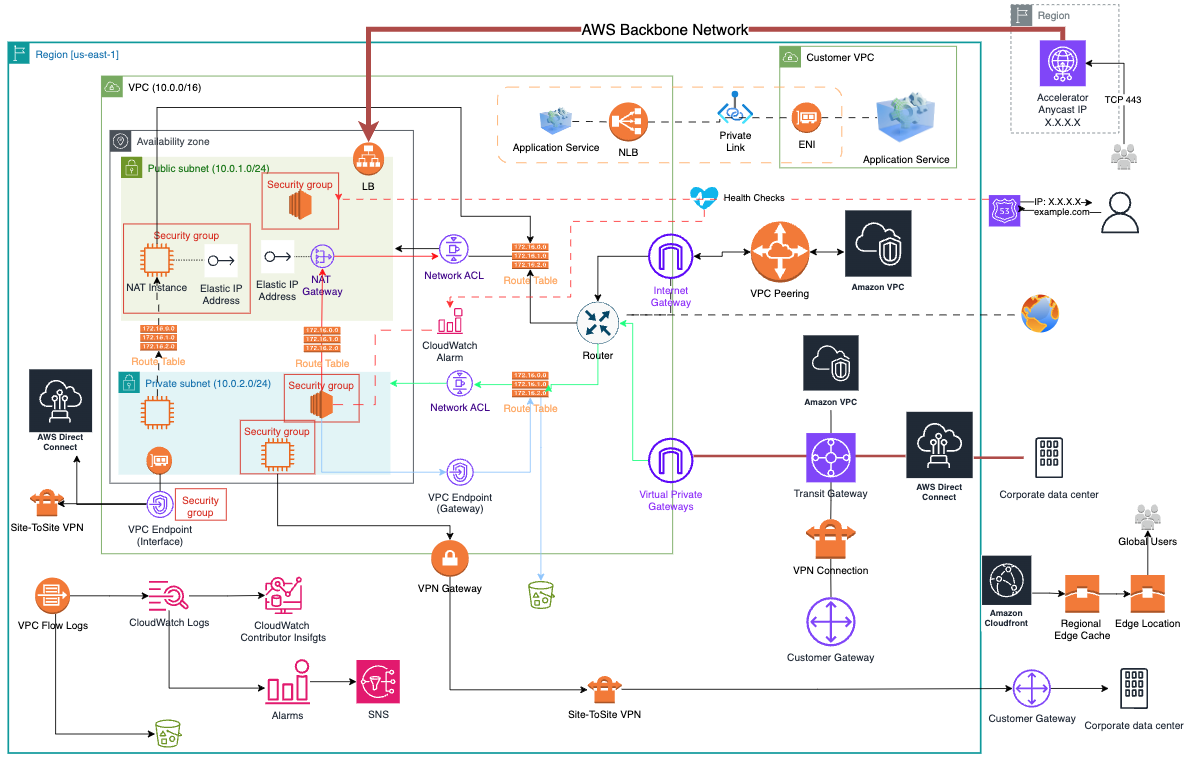

VPC (Virtual Private Cloud): isolated network in AWS cloud that can be fully customized. It is a virtual data center. A range of IP[1][2] is defined when the VPC is created [3][4][5][6]

- Subnet: partition of the network inside the VPC, located in a single AZ. A public subnet is accessible from the internet. Instances are launched into subnets. Use subnets in at least two AZs for high availability

- Elastic IP: static public IPv4 address that can be associated with an EC2 instance

- Route Tables: define how traffic is routed to the internet and between subnets.

- Internet Gateways: helps VPC to connect to internet. The public subnet has a route to the internet gateway, but private subnet does NOT have a route to Internet Gateway.

- NAT instance (self-managed): allows instances inside private subnets to access the internet or other services while denying inbound traffic from the internet. You patch and maintain it yourself. A NAT instance supports port forwarding and is associated with security groups.

- NAT Gateway (AWS-managed): no patching needed. It is assigned an Elastic IP and is redundant inside its AZ. A NAT gateway is used for outbound traffic, not inbound traffic, and cannot make the application available to internet-based clients.

- VPC Endpoint: connect to AWS services over the private network. It can be combined with PrivateLink and does not need NAT, gateways, etc. Traffic does not leave the AWS environment. Endpoints are horizontally scaled, redundant, and highly available.

- Interface endpoints: use an Elastic Network Interface (ENI) with a private IP, reached through DNS; support many services; use Security Groups

- Gateway Endpoints: virtual device you provision; configure route table to redirect the traffic; similar to NAT GW; supports connection to S3 and DynamoDB; All traffic that go through the VPC endpoint go direct to DynamoDB or S3 using private IP; use VPC Endpoint Policies

- Network ACL (Access Control List): the first line of defense. It works at subnet level: a firewall for subnets (IP-based rules only), controlling traffic in and out of one or more subnets. Stateless: you have to allow both inbound and outbound traffic (it checks for an allow rule in each direction). Supports allow and deny rules. The customer is responsible for configuring it. The default ACL allows all inbound and outbound traffic. A custom ACL denies all inbound and outbound traffic until rules are added. A subnet that is not explicitly associated with an ACL is associated with the default ACL. A subnet is associated with only one ACL, but an ACL can be associated with multiple subnets. Rules are evaluated in order, starting with the lowest-numbered rule (first match wins).
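The "lowest rule number first, first match wins" behaviour of a Network ACL can be sketched as below. This is a toy model for one direction of traffic only (a real NACL is stateless and needs matching inbound and outbound rules); the `Rule` class, the `evaluate` function and the sample addresses are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    number: int          # lower numbers are evaluated first
    cidr: str            # source range the rule applies to
    port: Optional[int]  # None means "all ports"
    allow: bool          # True = ALLOW, False = DENY


def evaluate(rules: list, source_ip: str, port: int) -> bool:
    """Return True if traffic is allowed; the first matching rule decides."""
    for rule in sorted(rules, key=lambda r: r.number):
        ip_matches = ip_address(source_ip) in ip_network(rule.cidr)
        port_matches = rule.port in (None, port)
        if ip_matches and port_matches:
            return rule.allow
    return False  # nothing matched: implicit deny


rules = [
    Rule(100, "0.0.0.0/0", 443, True),        # allow HTTPS from anywhere
    Rule(90, "203.0.113.0/24", None, False),  # but block this range entirely
]
```

Because rule 90 is evaluated before rule 100, hosts in 203.0.113.0/24 are denied even on port 443, which is why deny rules are usually given lower numbers than broad allow rules.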

VPCs Connections

- VPC Peering [1]: connect two VPCs via a direct network route using private IPs; works cross-account and cross-Region; no transitive peering; the VPCs must not have overlapping CIDRs. Security groups can be referenced cross-account but not cross-Region. For cross-Region peering, the security group has to allow traffic from the second Region using the application servers' IP addresses

- PrivateLink: provides private connectivity between VPCs, AWS services, and your on-premises networks, without exposing your traffic to the public internet. It is the best option to expose a service to many customer VPCs. It does not need VPC peering, route tables or gateways. It needs a Network Load Balancer (NLB) in the service VPC and an ENI in the customer VPC.

- VPN CloudHub: multiple sites, each with its own VPN connection. The traffic is encrypted.

- VPN:

- VPN - Virtual Private Network[1]: Establish secure connections between your on-premises networks and VPC using a secure and private connection with IPsec and TLS. Encrypted network connectivity. Over public internet

- Site-to-Site VPN: connects your on-premises network to a VPC via VPN. It needs a Virtual Private Gateway (VGW), a VPN concentrator on the AWS side of the VPN connection, and a Customer Gateway (CGW) on the customer side of the VPN. It can be used as a backup connection in case DX fails. Over the public internet

- AWS Managed VPN: Tunnels from VPC to on premises

- Virtual Private Gateway (VPN Gateway): connect one VPC to customer network. It is used to setup an AWS VPN ( logical, fully redundant distributed edge routing function that sits at the edge of your VPC)

- Customer Gateway: installed in customer network

- Client VPN: connect from your computer using OpenVPN. Connect to EC2 instances over a private IP.

- Direct Connect (DX)[3][4]: private physical connection between on-premises and AWS; it does not use the public internet. Types: dedicated connection (physical Ethernet connection associated with a single customer) or hosted connection (provisioned by a partner). It can be combined with AWS Transit Gateway to reach multiple VPCs. With DX alone, data in transit is not encrypted but is private; DX + VPN provides an IPsec-encrypted private connection. Resiliency: the most resilient solution is to use two Direct Connect locations, each with two independent connections, so that issues impacting a single DX location do not affect the availability of network connectivity to AWS.

- AWS Transit Gateway [1]: connects VPCs and on-premises networks using a central hub that works as a router. It allows transitive peering; works on a hub-and-spoke model; works on a regional basis (a transit gateway cannot span multiple regions, but it can be used across multiple accounts.)
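Two of the VPC peering rules listed above (no overlapping CIDRs, no transitive peering) are easy to check in code. The sketch below uses only the standard `ipaddress` module; the VPC names and CIDR ranges are made up for illustration.

```python
from ipaddress import ip_network


def can_peer(cidr_a: str, cidr_b: str) -> bool:
    """Two VPCs can be peered only if their CIDR ranges do not overlap."""
    return not ip_network(cidr_a).overlaps(ip_network(cidr_b))


def can_reach(peerings: list, src: str, dst: str) -> bool:
    """Peering is not transitive: only a direct peering connection counts."""
    direct = {frozenset(pair) for pair in peerings}
    return frozenset((src, dst)) in direct


# Hypothetical setup: A <-> B and B <-> C are peered, A and C are not.
peerings = [("vpc-a", "vpc-b"), ("vpc-b", "vpc-c")]
```

With these peerings, `vpc-a` cannot reach `vpc-c` through `vpc-b`. A hub such as Transit Gateway is the usual answer when transitive routing between many VPCs is needed.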

5G Networking with AWS Wavelength: infrastructure embedded within telecommunications providers' datacenters at the edge of the 5G network

AWS Private 5G is a managed service that makes it easy to deploy, operate, and scale your own private cellular network, with all required hardware and software provided by AWS.

VPC Flow Logs: capture information about the IP traffic going to and from network interfaces in your VPC.

Bastion Host is an instance in a public subnet that handles the communication between the internet and one or more EC2 instances in a private subnet via SSH. For this, configure the Bastion Host security group to allow inbound traffic from the internet on port 22.

VPC sharing allows multiple AWS accounts to create their application resources in shared and centrally managed Amazon VPCs. To set this up, the account that owns the VPC (owner) shares one or more subnets with other accounts (participants) that belong to the same organization from AWS Organizations. After a subnet is shared, the participants can view, create, modify, and delete their application resources in the subnets shared with them. Participants cannot view, modify, or delete resources that belong to other participants or the VPC owner. You can share Amazon VPCs to leverage the implicit routing within a VPC for applications that require a high degree of interconnectivity and are within the same trust boundaries. If it is necessary to restrict access to a specific instance so that consumers cannot connect to other instances in the VPC, the best solution is to use PrivateLink to create an endpoint for the application. The endpoint type will be an interface endpoint, and it uses an NLB in the shared services VPC

- Route 53: global managed DNS service provided by AWS:

- DNS: Convert name to IP

- TTL: time to live

- CNAME (canonical name): maps a domain name to another domain name. Cannot be used for naked domain names

- Alias: Map a host name to an AWS resource. You can't set TTL and cannot set an ALIAS record for an EC2 DNS name. An AWS DNS alias record is a type of record that points a domain name to an AWS resource, such as an Elastic Beanstalk environment, an Amazon CloudFront distribution, or an Amazon S3 bucket. It is used to create subdomains or point a domain name to a different AWS service. [1]

- Alias records can be used for naked domain names.

- DNS: Port 53

- DNS does not route any traffic but responds to the DNS queries

- Reliability and cost-effective way to route end users

- Health check: can monitor the health of a specified resource, the status of other health checks, or the status of an Amazon CloudWatch alarm. Only for public resources

- It is a hybrid architecture.

- It's not possible to extend Route 53 to on-premises instances.

- Pricing: you pay for hosted zones, queries, traffic flow, health checks and domain names.

- Routing Policies

- Weighted routing policy: routes traffic to multiple resources (associated with a single domain/subdomain) and lets you choose how much traffic is routed to each resource (traffic is split based on the weights assigned). It can be used, e.g., for load balancing. Assigning 0 to a record stops the traffic to that resource. If all records have weight 0, all records are returned with equal probability.

- Simple routing policy: routes the traffic to a single resource. It allows one record with multiple IPs; if the record has multiple values, Route 53 returns all values to the user in a random order.

- Geolocation Routing Policy: choose where the traffic will be sent based on the geographic location of the users (which DNS queries originate). Also, can restrict distribution of content.

- Geoproximity Routing: based on geographic location of the resources, and can choose to route more traffic to a given resource.

- Latency Routing Policy: based on the lowest network latency for the end user (which regions will give them the fastest response time)

- Failover routing policy: uses a primary and standby configuration that sends all traffic to the primary until it fails a health check, and then sends traffic to the secondary. It is not the right choice when the goal is the lowest latency. It is used when you want to create an active/passive setup. The primary record must be associated with a health check.

- Route 53 can be used to check the health of resources and only return healthy resources in response to DNS queries. Types of DNS failover configurations:

- Active-passive: Route 53 actively returns a primary resource. In case of failure, Route 53 returns the backup resource. Configured using a failover policy.

- Active-active: Route 53 actively returns more than one resource. In case of failure, Route 53 fails back to the healthy resource. Configured using any routing policy besides failover.

- Combination: Multiple routing policies (such as latency-based, weighted, etc.) are combined into a tree to configure more complex DNS failover.
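The weighted and failover policies described above can be modelled in a few lines. This sketch only computes the share of DNS answers each record would receive; it is not how Route 53 is implemented, and the record names are hypothetical.

```python
def weighted_probabilities(weights: dict) -> dict:
    """Share of responses per record: weight divided by the sum of all weights.
    If every weight is 0, all records are returned with equal probability."""
    total = sum(weights.values())
    if total == 0:
        return {name: 1 / len(weights) for name in weights}
    return {name: weight / total for name, weight in weights.items()}


def failover_answer(primary: str, secondary: str, primary_healthy: bool) -> str:
    """Active-passive: answer with the primary until its health check fails."""
    return primary if primary_healthy else secondary


# Hypothetical blue/green split: 90% of answers point to "blue".
split = weighted_probabilities({"blue": 90, "green": 10})
```

A record with weight 0 next to non-zero records receives no traffic, which is a common way to drain a resource before removing it.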

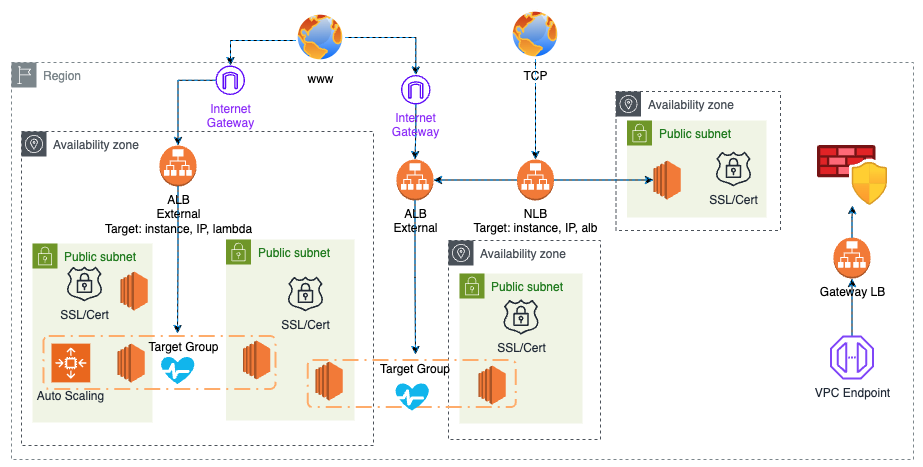

ELB (Elastic Load Balancer): distributes the traffic across healthy targets. [1][2][3]

- It can be across multiple AZs but Single Region

- Internal (private) or external (public)

- Servers that handle the traffic and distribute it across, e.g., EC2 instance, containers and IP address.

- It has only one point of access (DNS).

- Benefits: High availability across zones, automatic scaling and Fault Tolerance.

- AWS responsibilities: guarantees it will work, upgrade, maintenance, high availability

- Types:

- ALB (Application Load Balancer / Intelligent LB): HTTP/S; static DNS name (URL); Layer 7; It is a single point of contact for clients. ALBs allow you to route traffic based on the contents of the requests. Distributes incoming application traffic across multiple targets in multiple AZs. Good for microservices and container-based applications

- NLB (Network Load Balancer / Performance LB): high performance/low latency (TCP/UDP); static IP through Elastic IP; Layer 4 (connection level). When the NLB has only unhealthy registered targets, it routes requests to all the registered targets, known as fail-open mode.

- GWLB (Gateway Load Balancer / Inline Virtual Appliance LB): routes traffic to firewalls and other virtual appliances running on EC2 instances (Layer 3);

- Classic: Layer 4 and 7; mostly used for test or dev. A 504 error means the gateway has timed out

- Sticky Session: redirect to the same instance. Uses cookies (LB or application). Stickiness allows the load balancer to bind a user's session to a specific target within the target group. The stickiness type differs based on the type of cookie used. Can't be turned on if cross-zone load balancing is off.

- Shared responsibility: AWS is responsible for keeping it working, upgrading and maintaining it, and exposes only a few configuration options.
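The fail-open behaviour mentioned for the NLB can be illustrated with a tiny target-selection function. It is a sketch of the idea only; the target names are invented and a real load balancer considers far more than a single health flag.

```python
def routable_targets(health: dict) -> list:
    """Targets eligible for traffic: the healthy ones, or every registered
    target when none is healthy (fail-open mode)."""
    healthy = [target for target, is_healthy in health.items() if is_healthy]
    return healthy if healthy else list(health)


# Hypothetical target group with one unhealthy instance.
targets = routable_targets({"i-aaa": True, "i-bbb": False, "i-ccc": True})
```

Failing open trades correctness for availability: if the health checks themselves are misconfigured, traffic still reaches the targets instead of being dropped entirely.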

Performance

- Global Accelerator (GA): network service that sends users' traffic through AWS's global network infrastructure via accelerators.

- Direct users to different instances of the application in different regions based on latency.

- Improve global application availability and performance

- it will intelligently route traffic to the closest point of presence (reducing latency)

- Increase performance with IP cache. Can help deal with IP caching issues by providing static IPs

- By default, GA provides two static Anycast IP addresses

- Good when you use static IPs and need deterministic and fast regional failover.

- For TCP and UDP (major difference from CloudFront)

- Optimizes the route to endpoints

- Uses Edge Locations as the entry point for the traffic

- No caching; it proxies packets at the edge

- Integration with Shield for DDoS protection

- Target: EC2 instances or ALB

- Both Route 53 and Global Accelerator can create weights for application endpoints

- CloudFront: global (and fast) Content Delivery Network (CDN)

- It works with AWS and on-site architecture

- It can block countries, but the best place to do it is WAF. With WAF you must create a web ACL that includes the required IP restrictions and then associate the web ACL with the CloudFront distribution.

- If you need to prevent users in specific countries from accessing your content, you can use the CloudFront geo restriction feature

- Replicate part of your application to AWS Edge Locations (content is served at the edge)

- Edge location: location to cache the content

- It can use cache at the edge to reduce latency. Improves read performance

- It's possible to force the expiration of content or use TTL

- CloudFront Functions: you can write lightweight functions in JavaScript for high-scale, latency-sensitive CDN customizations. These functions can manipulate the requests and responses that flow through CloudFront, perform basic authentication and authorization, generate HTTP responses at the edge, and more. It costs less than Lambda@Edge.

- Security: defaults to HTTPS connections and can add a custom SSL certificate; DDoS protection, integration with Shield, Firewall. CloudFront signed cookies are a method to control who can access your content.

- Custom origin: ALB, EC2 instance, S3 website

- S3 bucket: distribute files and cache them at the edge; security with OAC (Origin Access Control)

- Can be integrated with CloudTrail

- Great for static content that must be available everywhere, as opposed to S3 Cross-Region Replication, which is great for dynamic content that needs to be available at low latency in a few regions

- Pricing: Traffic distribution; Requests; Data transfer out. Price is different for region

- Set price class to determine where in the world the content will be cached.

S3

There are three categories to Storage Service:

- File storage: stores files in a hierarchy

- Block storage: stores data in fixed-size blocks. On a change, only the affected block is rewritten

- Object storage: stores data as objects. On a change, the whole object is rewritten

S3[1][2] is an AWS storage service for objects. It allows you to store and retrieve data from anywhere at a low cost. Basically for static files. The files are stored in buckets, and the service is highly available and highly durable. Data is stored redundantly across multiple AZs. The bucket name must be globally unique, even though the bucket is created at the Region level. S3 is designed for frequent access.

As characteristics, S3 offers different storage classes for different use cases (Tiered Storage); it has lifecycle management that automatically moves objects between storage tiers or deletes them through rules to be more cost-effective; and it uses versioning to retrieve old objects. Also, it has strong read-after-write consistency.

- Object store and global file system.

- Used to store any files up to 5TB each, with no limit on the total stored, in buckets (directories/containers)

- These objects have a key.

- Virtually unlimited amount of online highly durable object storage.

- Each bucket is inside of a region

- Write-once-read-many (WORM) - prevention of deletion or overwritten

- Use cases: backup, disaster recovery, archive, application hosting, media hosting, Software delivery, static website

- Shared Responsibility

- AWS: infrastructure (global security, durability, availability), configuration and vulnerability analysis, compliance validation, AWS employees cannot access customer data, separation between customers

- Customer: versioning, bucket policies, replication, logging and monitoring, storage class, data encryption, IAM users and roles

- Pricing: depends on the storage class, storage quantity, number of requests, transition requests and data transfer. The Requester Pays option is available

Security

- Access Control Lists (ACLs): account-level control. It defines which accounts/groups can access and the type of access. It can be attached to an object or a bucket.

- Bucket Policies: It is account level and user level control. It defines who and what is allowed or denied - allows across account

- IAM Policy: It is user level control. A policy attached to a user can give permission to access S3 bucket

- IAM Role for EC2 instance can allow EC2 instance access S3 bucket

- Presigned URL: gives access to the object identified in the URL. To create one, you provide security credentials and then specify a bucket name, an object key, an HTTP method (PUT for uploading objects), and an expiration date and time. Presigned URLs are valid only for the specified duration. It is a secure way to give a third party (e.g., a vendor) time-limited access to a file in the S3 bucket. It can be created by Console or CLI.

- MFA delete: required to delete an object version or suspend versioning. Only the bucket owner can enable it.

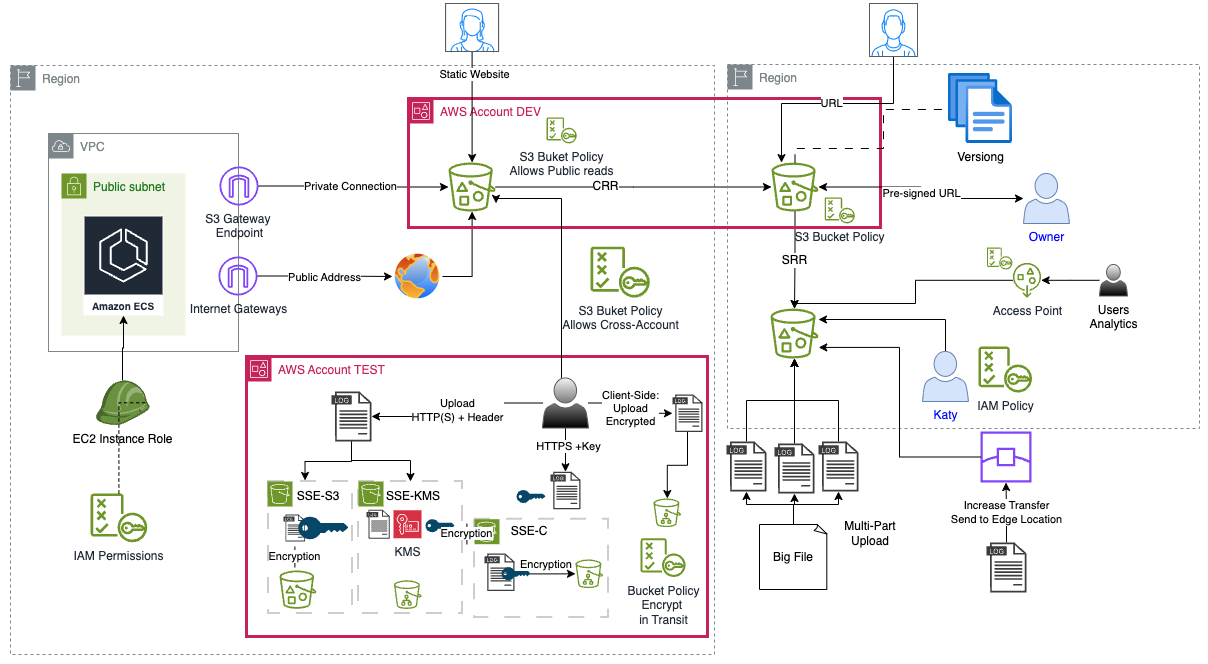

- Encrypting S3 Object:

- Types:

- in transit: SSL/TLS; HTTPS (a bucket policy can force encryption)

- at rest (server-side): SSE-S3 (AES 256-bit -> default -> all objects); SSE-KMS (advantages: user control + audit key usage using CloudTrail); SSE-C: customer-provided keys (S3 does NOT store the encryption key you provide; must use HTTPS; key must be provided in header). Default encryption on a bucket will encrypt all new objects stored in the bucket

- at rest (client-side): encrypt before upload file

- Enforcing Server-side Encryption adding a parameter in PUT Request Header:

- x-amz-server-side-encryption: AES256

- x-amz-server-side-encryption: aws:kms

- PS: You can create a bucket policy that denies any S3 PUT request without this parameter

- CORS: needs to be enabled

- Types:
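The bucket policy mentioned above, which denies PUT requests that lack the `x-amz-server-side-encryption` header, could look like the sketch below. It only builds the policy document as a Python dictionary and does not call AWS; the bucket name `example-bucket`, the statement ID and the function name are placeholders.

```python
import json


def deny_unencrypted_puts_policy(bucket_name: str) -> dict:
    """Bucket policy that rejects PutObject requests sent without the
    x-amz-server-side-encryption header (the Null condition is true
    when the header is absent)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyPutWithoutSSEHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                "Condition": {
                    "Null": {"s3:x-amz-server-side-encryption": "true"}
                },
            }
        ],
    }


policy = deny_unencrypted_puts_policy("example-bucket")
policy_json = json.dumps(policy, indent=2)  # text to paste into the bucket policy editor
```

Since default bucket encryption already encrypts new objects, a policy like this is mostly useful when you want to force clients to state the encryption method explicitly.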

Buckets are private by default. To make a bucket publicly accessible, it is necessary to allow public access to the bucket and its objects. It involves Object ACLs (individual object level) and Bucket Policies (entire bucket level).

S3 is a good option for hosting a static website. It scales automatically. For that, the bucket access should be public and you can add a policy to allow read permission for the objects. Other use cases can be: backup and storage, disaster recovery, archive, hybrid cloud storage, data lakes and big data analytics.

Amazon S3 Access Points, a feature of S3, simplify data access for any AWS service or customer application that stores data in S3. With S3 Access Points, customers can create unique access control policies for each access point to easily control access to shared datasets. You can also control access point usage using AWS Organizations support for AWS SCPs.

Lock

- S3 Object Lock: prevent objects from being deleted or overwritten for a fixed amount of time or indefinitely using WriteOnceReadMany (WORM) model.

- Governance Mode: overwrite or delete an object version only with special permissions

- Compliance Mode: even root cannot overwrite or delete protected object version for the duration of the retention period.

- Legal hold can be placed and removed by user with s3:PutObjectLegalHold permission

- Glacier Vault Lock: WORM. Deploy and enforce compliance controls for individual S3 Glacier vaults with a vault lock policy. Objects can be deleted.

Optimizing S3 Performance:

- Use prefixes (folders inside the bucket) to increase performance

- The use of KMS impacts performance because it is necessary to call GenerateDataKey when uploading a file and call Decrypt in the KMS API to download a file. Prefer SSE-S3.

- Multipart uploads can increase performance. They are recommended for files over 100MB and required for files over 5GB. The performance can be improved by parallelizing uploads.

- S3 Byte-Range Fetches: parallelize downloads by specifying byte ranges

- S3 Transfer Acceleration: uses CloudFront Edge Locations to accelerate global uploads & downloads into Amazon S3 (enables fast, easy, and secure transfers of files over long distances between your client and your Amazon S3 bucket)
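The multipart thresholds and byte-range fetches above can be sketched without touching AWS at all. The helper names are hypothetical; the thresholds come from the notes (recommended over 100MB, required over 5GB) and the `bytes=start-end` format is the standard HTTP Range header with an inclusive end offset.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def upload_strategy(size_bytes: int) -> str:
    """Pick an upload method from the object size."""
    if size_bytes > 5 * GB:
        return "multipart (required)"
    if size_bytes > 100 * MB:
        return "multipart (recommended)"
    return "single PUT"


def byte_ranges(size_bytes: int, part_size: int) -> list:
    """Range header values that split an object into parts for parallel download."""
    return [
        f"bytes={start}-{min(start + part_size, size_bytes) - 1}"
        for start in range(0, size_bytes, part_size)
    ]


# A 10-byte object fetched in 4-byte parts needs three requests.
ranges = byte_ranges(10, 4)
```

Each range can be requested by a separate worker, and a failed part can be retried on its own instead of restarting the whole transfer.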

Backup

- Versioning: stores all versions of an object; good for backup; once enabled it cannot be disabled (only suspended); it can be integrated with lifecycle rules and supports MFA. For a static webpage, the latest version is served, not the previous ones. It can use Lifecycle Management rules to transition versions through tiers.

- Replication [1][2]:

- Replicates objects from one bucket to another; versioning must be enabled on both sides

- Deleted objects are not replicated by default

- Only new objects are replicated

- Cross-Region Replication (CRR): compliance, lower latency access

- Same-Region Replication (SRR): log aggregation, live replication

- Copy is asynchronous


Move Objects

- The S3 sync command can be used to copy objects between buckets. It lists the source and target buckets to work out which objects need to be copied.

- Amazon S3 Batch Replication provides you a way to replicate objects that existed before a replication configuration was in place, objects that have previously been replicated, and objects that have failed replication. This is done through the use of a Batch Operations job.

Storage Classes

- Standard: High Availability and durability; Designed for Frequent Access; Suitable for Most workflows; low latency and high throughput. Ex: Big Data analytics, mobile, gaming, content distribution.

- Standard-IA (Infrequent Access): rapid access; you pay to access the data; better for long-term storage, backups and disaster recovery. Compared with Glacier, it is the best option if you need to retrieve infrequently accessed data immediately.

- One Zone-Infrequent Access: data stored redundantly inside a single AZ; costs 20% less than Standard-IA; better for long-lived, infrequently accessed, non-critical data. Ex: secondary backup copies of on-premises data.

- Intelligent-Tiering: good to optimize cost. It moves the data between classes in a cost-efficient way if you don't know what is frequently accessed or not. No performance impact or operational overhead. More expensive than Standard-IA

- Glacier: archive data; pay per retrieval; cheap storage. You can use server-side filtering and retrieve less data with SQL

- Glacier Instant Retrieval (minimum duration - 90 days)

- Glacier Flexible Retrieval -> for data that does not need immediate access; retrieve large sets of data at no cost (backup, disaster recovery) (minimum duration - 90 days)

- Glacier Deep Archive -> cheapest -> data sets kept for 7-10 years (retrieval within 12h). (minimum duration - 180 days)

Last notes

- S3 Event Notification: enables you to receive notifications when certain events happen in your bucket. To enable notifications, you must first add a notification configuration that identifies the events you want Amazon S3 to publish and the destinations where you want Amazon S3 to send the notifications. An S3 notification can be set up to notify you when objects are restored from Glacier to S3. Notifications can be sent to SNS, SQS, Lambda or EventBridge (archive, replay events, reliable delivery).

- S3 Storage Lens: Tools to analyse S3

- S3 Access Log: A new bucket will store the logs. They must be in the same region.

- S3 Object Lambda

Loosely Coupled

The AWS recommendation for architecture is loose coupling. It can be achieved with ELB and multiple instances. However, in some scenarios ELB may not be available. For this, other resources can be used to achieve it. Here are some services that go in this direction.

SQS (cloud native service) [1][2]:

- Fully managed message queuing service used to decouple and scale microservices, distributed systems and serverless applications.

- Retention of messages: default is 4 days, maximum is 14 days, and the minimum can be customized (e.g., 1 minute).

- Deletion: after the retention period, or after the message is read and deleted by the consumer.

- Pay-as-you-go pricing.

- Asynchronous process.

- Settings: Delivery delay (0 up to 15 minutes)

- Message size: up to 256KB of text

- FIFO queues require a Message Group ID and a Message Deduplication ID

- AWS recommend using separate queues when you need to provide prioritization of work

- Short polling and long polling are used to control the amount of time the consumer process waits before closing the API call and trying again.

- Security

- Encryption: messages are encrypted in transit (HTTPS) by default but not at rest (can be done using KMS)

- Access Control with IAM policy - SQS API

- SQS Access policy with resource policy - useful for cross-account or other services

- Strategy:

- FIFO: guaranteed ordering, no message duplication, 300 transactions per second (can achieve 3000 with batching). FIFO high throughput mode processes up to 9000 transactions per second, per API, without batching, and up to 90000 messages per second using batching APIs. Can use the message group ID to process messages in order within each group

- Standard: better performance; ordering is best-effort, so strict ordering must be implemented by the application; messages can be duplicated; unlimited throughput

- In scenarios of real-time should use Kinesis instead of SQS

DLQ (Dead-Letter Queues):

- Target for messages that cannot be processed successfully.

- It works with SQS and SNS.

- Useful for debugging applications and messaging systems.

- Redrive capability allows moving the message back into the source queue.

- It is an SQS queue; the DLQ of a FIFO queue must also be a FIFO queue.

- Benefits: can use alarms

- Publicly accessible by default
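How a message ends up in a dead-letter queue can be simulated in memory. This sketch assumes a maximum receive count of 3 (a value chosen for illustration, not a default) and uses hypothetical function names; it does not use the SQS API.

```python
from collections import deque


def process_with_dlq(messages: list, handler, max_receive_count: int = 3):
    """Deliver each message to handler; a message that keeps failing is moved
    to the dead-letter list once it has been received max_receive_count times."""
    queue = deque((message, 0) for message in messages)
    processed, dead_letters = [], []
    while queue:
        message, receives = queue.popleft()
        receives += 1
        try:
            handler(message)
            processed.append(message)
        except Exception:
            if receives >= max_receive_count:
                dead_letters.append(message)       # give up: send to the DLQ
            else:
                queue.append((message, receives))  # becomes visible again later
    return processed, dead_letters


def sample_handler(message: str) -> None:
    """Hypothetical consumer that cannot process messages starting with 'bad'."""
    if message.startswith("bad"):
        raise ValueError(f"cannot process {message!r}")


processed, dead_letters = process_with_dlq(["ok-1", "bad-2", "ok-3"], sample_handler)
```

Keeping the failed messages instead of dropping them is what makes the DLQ useful for debugging, and a redrive simply feeds `dead_letters` back into the source queue once the consumer is fixed.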

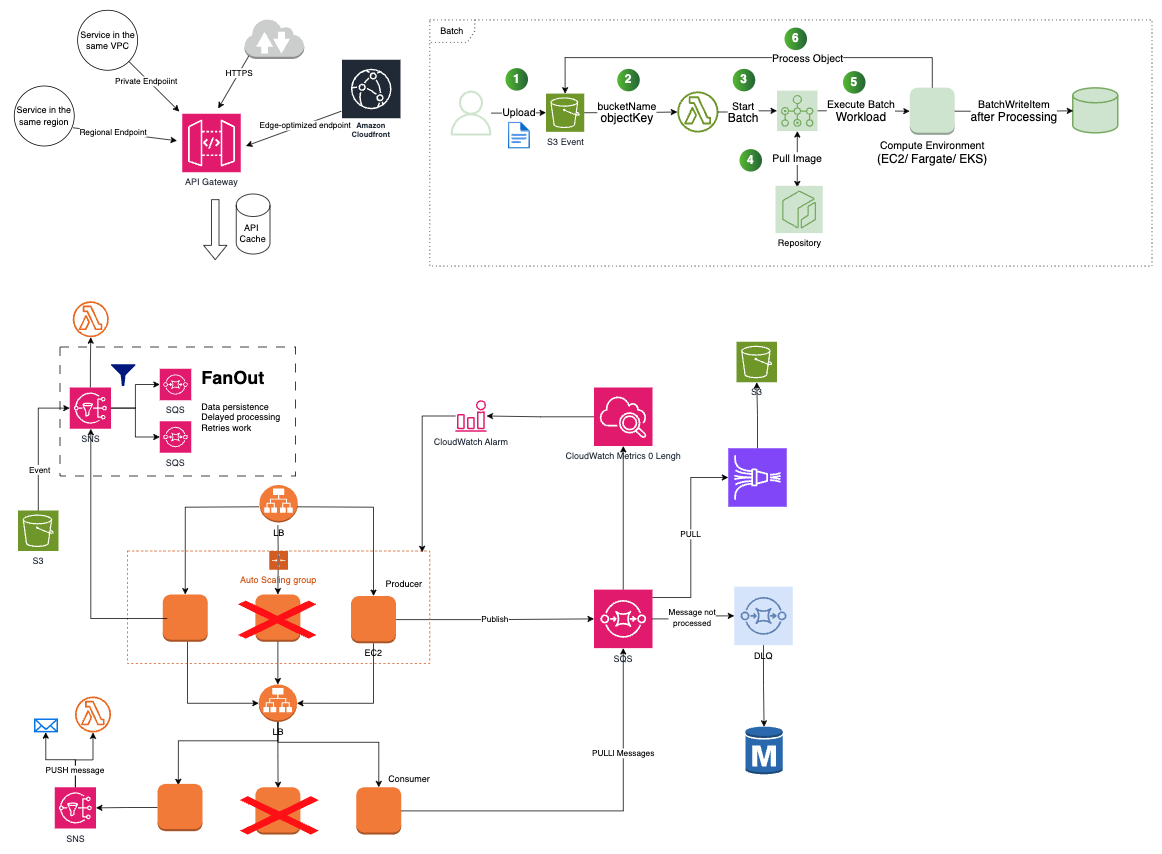

SNS (cloud native service) [1][2] :

- Push-based messaging service.

- Delivers messages to the subscribed endpoints.

- Fully managed messaging service for application-to-application (A2A) and application-to-person (A2P) communication.

- Cycle: Publisher -> SNS topic / Subscriber -> get all messages from the topic

- Subscribers: Kinesis Data Firehose, SQS, Lambda, email, SMS, and HTTP/HTTPS endpoints

- Size: 256KB of text

- Extended Library allows sending messages up to 2GB. The payload is stored in S3 and then SNS publishes a reference to the object.

- DLQ Support

- FIFO or Standard

- Security

- Encryption in transit by default (HTTPS) and can add at rest via KMS

- Access Policies: a resource policy can be attached, useful for cross-account access

- FanOut (SNS+SQS) [1]: messages published to an SNS topic are replicated to multiple subscribed endpoints (1:N)

- Message Filtering: a filter policy on a subscription delivers only the matching messages to that subscriber

- Publicly accessible by default
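Fan-out combined with message filtering can be sketched as a small simulation. This is a simplified model, not the SNS API: only exact string matching on message attributes is modelled (real filter policies support more operators), and the queue names and the functions `matches` and `fan_out` are hypothetical.

```python
def matches(filter_policy, attributes):
    """True when every key in the policy has an attribute value listed in it."""
    return all(
        attributes.get(key) in allowed for key, allowed in filter_policy.items()
    )


def fan_out(attributes, subscriptions):
    """Return the subscribers that would receive a message with these attributes."""
    return [
        name for name, policy in subscriptions.items() if matches(policy, attributes)
    ]


# Hypothetical SQS subscribers; an empty policy means "receive everything".
subscriptions = {
    "billing-queue": {"event": ["order_paid"]},
    "audit-queue": {},
    "shipping-queue": {"event": ["order_paid", "order_shipped"]},
}

receivers = fan_out({"event": "order_shipped"}, subscriptions)
```

Here the audit queue gets every message because it has no filter, while the billing queue is skipped for `order_shipped` events.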

Amazon MQ [1]:

- Message broker

- Good when migrating to the cloud

- Supports ActiveMQ or RabbitMQ

- Topics and queues

- 1:1 and 1:N message design

- Amazon MQ requires a private network (VPC, Direct Connect, or VPN)

- Does not scale as well as SNS or SQS

- Runs on servers and can run in multiple AZs with failover

API Gateway:

- Fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale

- RESTful and WebSocket APIs

- Front door of the application.

- Integrate with lambda, HTTP, endpoints, etc

- Features:

- Security - protect endpoints by attaching a WAF (Web Application Firewall);

- Stop abuse - users can easily implement DDoS protection and rate limiting to curb abuse of their endpoints;

- Ease of Use

- Endpoint Types:

- Edge-optimized - (default option): API requests get sent through a CloudFront edge location (best for global users);

- Regional - can also be combined with CloudFront; reduces latency for clients in the same Region; can be protected with WAF

- Private - only accessible via VPCs using interface VPC endpoint or Direct Connect

- Securing APIs: user authentication (IAM, roles, Cognito, custom authorizer); ACM certs for edge-optimized endpoints and regional endpoints; WAF
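Rate limiting of the kind mentioned above is commonly implemented with a token bucket: a steady refill rate plus a burst capacity. The sketch below is a generic illustration of that algorithm, not API Gateway code; the class name `TokenBucket` and its parameters are hypothetical, and time is passed in explicitly so the behaviour is deterministic.

```python
class TokenBucket:
    """Generic token bucket: `rate` tokens per second, at most `burst` stored."""

    def __init__(self, rate, burst):
        self.rate = rate            # steady-state requests per second
        self.burst = burst          # maximum number of stored tokens
        self.tokens = float(burst)  # start with a full bucket
        self.last = 0.0             # time of the previous call

    def allow(self, now):
        """Refill for the elapsed time, then spend one token if available."""
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller would answer with a throttling error


bucket = TokenBucket(rate=1, burst=2)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
```

With a burst of 2 and a rate of 1 request per second, the third request at the same instant is rejected and a request one second later is accepted again.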

AWS Batch

- Runs batch computing workloads within AWS (EC2 or ECS/Fargate). Fargate is recommended when jobs need fast start times (<30 seconds), 16 vCPUs or fewer, no GPU, and 120 GiB of memory or less. EC2 is the option when you need more control, GPUs, custom AMIs, high levels of concurrency, or access to Linux parameters

- Simple: automatically provisions and scales; no installation is required

- AWS provisions the compute and memory. The customer only needs to submit or schedule the batch job.

- Components: Jobs; Job definitions (how jobs will run); Job queues; Compute environments

- Batch vs Lambda

- Time limits: Lambda has a 15-minute execution time limit; Batch does not have this limit

- Disk space: Lambda has limited disk space, and using EFS requires the functions to live within a VPC

- Runtime limitations: Lambda is fully serverless but supports a limited set of runtimes; Batch can use any runtime because it uses Docker
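The selection criteria in these notes (Lambda's 15-minute limit and the Fargate versus EC2 thresholds for Batch) can be condensed into a small decision helper. This is only a sketch of the rules listed above, not an official sizing tool; the function `suggest_compute` and its return labels are hypothetical.

```python
LAMBDA_MAX_SECONDS = 900     # Lambda execution time limit (15 minutes)
FARGATE_MAX_VCPUS = 16       # Fargate threshold taken from the notes above
FARGATE_MAX_MEMORY_GIB = 120


def suggest_compute(runtime_seconds, vcpus, memory_gib,
                    needs_gpu=False, needs_custom_ami=False):
    """Suggest where a job could run, following the criteria in the notes."""
    if (needs_gpu or needs_custom_ami
            or vcpus > FARGATE_MAX_VCPUS
            or memory_gib > FARGATE_MAX_MEMORY_GIB):
        return "Batch on EC2"
    if runtime_seconds > LAMBDA_MAX_SECONDS:
        return "Batch on Fargate"
    return "Lambda or Batch on Fargate"


choice = suggest_compute(runtime_seconds=3600, vcpus=4, memory_gib=16)
```

A one-hour job with modest resources exceeds the Lambda time limit but fits Fargate, so the helper points to Batch on Fargate.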

Step Functions [1]

- Coordinates distributed apps

- Orchestration service; graphical console;

- Main components: state machine (a workflow with different event-driven steps) and tasks (specific states within a workflow that represent a single unit of work). A state is every single step within a workflow

- Execution: instances where you run your workflows in order to perform your task

- Types:

- Standard: exactly-once execution; can run for up to one year; useful for long-running workflows that need an auditable history; up to 2000 executions per second; priced per state transition

- Express: at-least-once execution; can run for up to five minutes; useful for high-event-rate workflows, e.g. IoT data streaming; priced by number of executions, duration, and memory
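A state machine is defined in Amazon States Language, a JSON document with a `StartAt` state and a map of `States`. The sketch below builds a two-task definition as a Python dictionary and checks that every transition points to an existing state. The workflow, state names, and Lambda ARNs are hypothetical, and `check_transitions` is an illustrative helper, not part of any AWS SDK.

```python
import json

# Hypothetical two-step workflow; the ARNs are placeholders.
state_machine = {
    "Comment": "Hypothetical order workflow",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:us-east-1:123456789012:function:validate-order",
            "Next": "ChargeCustomer",
        },
        "ChargeCustomer": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:us-east-1:123456789012:function:charge-customer",
            "End": True,
        },
    },
}


def check_transitions(definition):
    """Return a list of problems: unknown start state or dangling Next targets."""
    states = definition["States"]
    problems = []
    if definition["StartAt"] not in states:
        problems.append("StartAt -> " + definition["StartAt"])
    for name, state in states.items():
        target = state.get("Next")
        if target is not None and target not in states:
            problems.append(name + " -> " + target)
    return problems


definition_json = json.dumps(state_machine, indent=2)
```

The JSON string in `definition_json` is the kind of document that would be supplied as the state machine definition; the check is a cheap way to catch a mistyped state name before deploying.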

AppFlow [1]:

- Integration service for exchanging data between SaaS apps and AWS services;

- Pulls data records from third party SaaS vendors and stores them in S3;

- Bi-directional data transfer

- Transfer up to 100 gibibytes per flow, and this avoids the Lambda function timeouts

- Data mapping (how source data is stored in the destination);

- Filter (controls which data is transferred);

- Trigger (how the flow is started)

- Use cases: Salesforce records to Redshift; analyzing conversations in S3; migration to Snowflake

Serverless Applications

- Lambda: FaaS (Function as a Service)

- Example of integration: ELB, API Gateway, Kinesis, DynamoDB, S3, CloudFront, CloudWatch, SNS, SQS, Cognito

- Virtual functions

- By default, a Lambda function is launched outside of a VPC, but it can be configured to run inside a VPC

- Synchronous invocation: CLI, SDK, API Gateway

- Asynchronous invocation: S3, SNS, CloudWatch, etc.

- Run on-demand

- Scaling automatically

- Event-driven

- Lambda needs IAM role to access AWS APIs

- Can be monitored through CloudWatch

- Pricing: pay per call (request) and duration (execution time). The free tier includes 1,000,000 requests and 400,000 GB-seconds of compute per month. After that, you pay per request and per GB-second.

- Compute:

- 1,000 concurrent executions

- Short-term execution (900 seconds - 15 minutes). If more time is needed, use Batch or EC2

- Storage:

- 512MB-10GB disk storage (integration with EFS);

- 4KB for all environment variables;

- 128MB-10GB memory allocation

- Easily set memory - up to 10,240MB, and CPU scales proportionally with memory

- Deployment and configuration

- Compressed deployment package <= 50MB

- Uncompressed package <= 250MB

- Request and response payload size up to 6MB

- Streamed responses up to 20MB

- Lambda@Edge: function attached to CloudFront to run close to the user and minimize latency
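The pricing model above (requests plus duration, with a monthly free tier of 1,000,000 requests and 400,000 GB-seconds) can be turned into a rough estimate. This is a back-of-the-envelope sketch: the function name is hypothetical, and the default prices are assumptions that vary by Region and architecture, so check the current price list before relying on the numbers.

```python
FREE_REQUESTS = 1_000_000    # monthly free tier, requests
FREE_GB_SECONDS = 400_000    # monthly free tier, compute


def monthly_lambda_cost(requests, avg_duration_ms, memory_mb,
                        price_per_million_requests=0.20,     # assumed price (USD)
                        price_per_gb_second=0.0000166667):   # assumed price (USD)
    """Estimate the monthly bill in USD after subtracting the free tier."""
    # Compute usage is billed as memory (GB) multiplied by run time (seconds).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, requests - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    request_cost = billable_requests / 1_000_000 * price_per_million_requests
    compute_cost = billable_gb_seconds * price_per_gb_second
    return request_cost + compute_cost


small = monthly_lambda_cost(requests=1_000_000, avg_duration_ms=100, memory_mb=128)
large = monthly_lambda_cost(requests=3_000_000, avg_duration_ms=1000, memory_mb=1024)
```

The first workload stays inside the free tier, while the second one is billed for 2,000,000 requests and 2,600,000 GB-seconds.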

Serverless Application Repository

- It allows users to find, deploy, and publish their own serverless applications

- Privately share applications

- Deeply integrated with Lambda service

- Options:

- Publish: makes apps available for others to find and deploy; SAM templates help to define apps; private by default

- Deploy: find and deploy published apps; browse public apps without an AWS account; browse within the Lambda Console

Aurora Serverless

- On-Demand and Auto Scaling for Aurora database

- Automation of monitoring workloads and adjusting capacity for database

- Pricing: charged for resources consumed by DB cluster

- Concepts: Aurora Capacity Units (ACU - how the cluster scales); allocated by AWS-managed warm pools; each ACU has 2GiB of memory with matching CPU and networking capability; resiliency (six copies of data across three AZs)

- Use cases: variable workloads; multi-tenant apps (the service manages capacity for each app); new apps; dev and test of new features; mixed-use apps; capacity planning

AWS AppSync (GraphQL): stores and syncs data between mobile and web apps. Robust, scalable GraphQL interface for application developers; combines data from multiple sources; enables integration for developers via GraphQL (a data language used by apps to fetch data from servers)

SWF [1]

Containers

Here are serverless services for containers [1].

Orchestrations

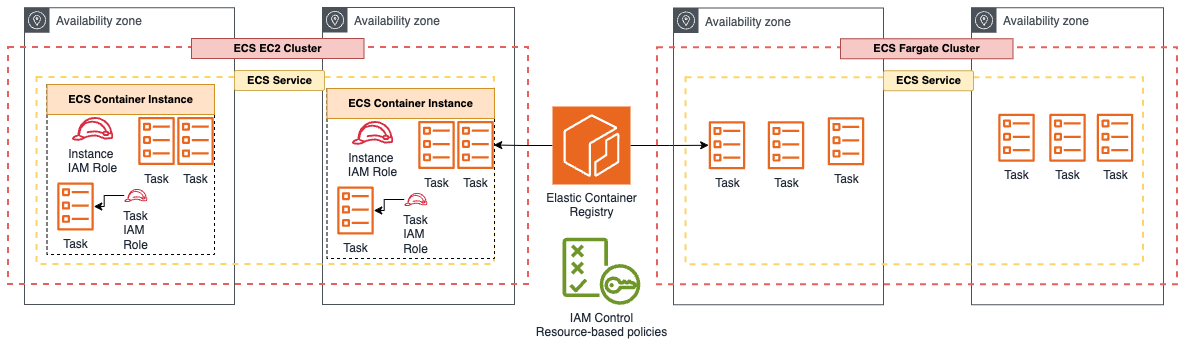

- ECS (Elastic Container Service) [2]

- ECS Launch Types: EC2 and Fargate

- Components:

- Cluster: logical grouping of tasks or services. It can have ECS Container instances in different AZ

- Task: a running Docker container. To specify permissions for a specific task on ECS you should use IAM Roles for Tasks. The taskRoleArn parameter is used to specify the IAM role.

- Container instance: EC2 instance running the ECS agent

- Service: defines long-running tasks. It can control the number of tasks with Auto Scaling, and attach an ELB to distribute traffic across containers

- Why: ECS can manage any number of containers; ECS will place the containers and keep them online; containers are registered with the LB; containers can have roles attached to them; easy to set up and scale

- Best used when you are all in on AWS

- ECS Pricing

- Shared responsibility

- AWS start and stop the containers

- Customer has to provision and maintain the infrastructure (EC2 instance).

- ECS anywhere:

- on-premises, no orchestration, completely managed, inbound traffic has to be managed separately (no ELB support)

- Requirements: SSM Agent, ECS Agent, Docker; register external instances; installation script within the ECS console; execute scripts on on-premises VMs; deploy containers using the EXTERNAL launch type

- EKS (Amazon Elastic Kubernetes Service) [3]:

- Manage Kubernetes clusters on AWS

- Can be used on-premises and in the cloud

- Best used when you are not all in on AWS

- More work to configure and integrate with AWS

- EKS-D: managed by developer. Self-managed Kubernetes deployment (EKS anywhere)

- EKS anywhere:

- on-premises EKS, EKS Distro (deployment, usage and management for clusters), full lifecycle management

- Concepts: control plane, location, updates (manual CLI), Curated Packages, Enterprise Subscription

Fargate [1]

- Serverless compute engine for Docker containers

- AWS manages the infrastructure

- Works with ECS and EKS

- Benefits: no OS access; pay based on the resources allocated and the time they run (pricing model: vCPU and memory allocated); short-running tasks; isolated environment per container; capable of mounting EFS file systems for persistent, shared storage. In some use cases this is an advantage compared with EC2.

- Compared with Lambda, select Fargate when the workload is more consistent (predictable). Fargate also allows Docker use across the organization and gives developers some control. On the other hand, Lambda is better for unpredictable or inconsistent workloads and is a good fit for a single function.

- It is for containers and applications that need to run longer

- Shared responsibility

- AWS: Automatically provision resources. AWS runs the containers for the customer.

- The customer doesn't need to provision the infrastructure.
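To make the pieces concrete, here is a sketch of an ECS task definition for the Fargate launch type, written as a Python dictionary and serialized to JSON. The family name, role ARNs, and image URI are hypothetical placeholders; the field names follow the ECS task definition format, including the `taskRoleArn` parameter mentioned earlier.

```python
import json

# Hypothetical task definition; names, ARNs and the image URI are placeholders.
task_definition = {
    "family": "web-app",
    "requiresCompatibilities": ["FARGATE"],
    "networkMode": "awsvpc",  # Fargate tasks use the awsvpc network mode
    "cpu": "256",             # 0.25 vCPU, billed while the task runs
    "memory": "512",          # MiB, billed while the task runs
    "taskRoleArn": "arn:aws:iam::123456789012:role/web-app-task-role",
    "executionRoleArn": "arn:aws:iam::123456789012:role/ecs-task-execution-role",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "123456789012.dkr.ecr.us-east-1.amazonaws.com/web-app:latest",
            "essential": True,
            "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
        }
    ],
}

task_definition_json = json.dumps(task_definition, indent=2)
```

The task role grants permissions to the application code in the container, while the execution role is used by ECS itself, for example to pull the image from ECR.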

ECR - Elastic Container Registry

- Managed container image registry

- Secure, scalable, reliable infrastructure

- Private container image repository

- Components: Registry (private); Authorization token (to push and pull images to and from registries); Repository; Images

- Secure: permission via IAM; repository policy

- Cross-Region; Cross-account; configured per repository and per region

- Stores customer images to be run by ECS or Fargate

- Integration with customized container infrastructure, ECS, EKS, locally (linux - for development purpose)

- Use lifecycle rules to expire and remove unused images

- Scan on push repository can identify vulnerabilities
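The rules that expire unused images are expressed as an ECR lifecycle policy, a JSON document attached to a repository. The sketch below builds one rule that expires untagged images after a number of days. The helper name `expire_untagged_policy` is hypothetical; the keys (`rulePriority`, `selection`, `action`) follow the ECR lifecycle policy format.

```python
import json


def expire_untagged_policy(days):
    """Return lifecycle policy JSON that expires untagged images after `days`."""
    if days < 1:
        raise ValueError("days must be at least 1")
    policy = {
        "rules": [
            {
                "rulePriority": 1,
                "description": f"Expire untagged images older than {days} days",
                "selection": {
                    "tagStatus": "untagged",
                    "countType": "sinceImagePushed",
                    "countUnit": "days",
                    "countNumber": days,
                },
                "action": {"type": "expire"},
            }
        ]
    }
    return json.dumps(policy, indent=2)


lifecycle_policy_json = expire_untagged_policy(14)
```

The resulting text would be stored as the lifecycle policy of one repository, since these policies are configured per repository.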

AWS database services

It's possible to install a database on an EC2 instance. This can be necessary when full control over the instance and the database is needed, or when using a third-party database engine [1][2].

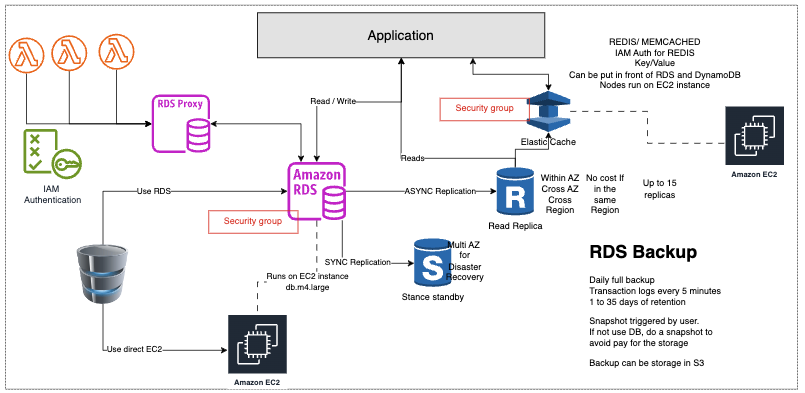

RDS - Amazon Relational Database Service[1][2][3].

- Runs on EC2 instances

- Benefits of deploying a database on RDS instead of EC2: hardware provisioning, database setup, automated backups, and software patching. It reduces database administration tasks. There is no need to manage the OS.

- Supported engines include: SQL Server, Oracle, MySQL, PostgreSQL, MariaDB

- It's possible to encrypt RDS instances and snapshots using AWS Key Management Service (KMS)

- Amazon RDS creates an SSL certificate and installs the certificate on the DB instance when it provisions the instance. You can download a root certificate from AWS that works for all Regions, or you can download Region-specific intermediate certificates and connect to the RDS DB instance. This ensures SSL/TLS encryption in transit. The certificates are signed by a certificate authority. You cannot use self-signed certificates with RDS.

- You cannot enable/disable encryption in transit using the RDS management console or use a KMS key.

- Scales up by increasing the instance size (compute and storage)

- Replicas are read-only. They improve database scalability.

- Read Replicas: read-only copies of the primary database. They can be cross-AZ and cross-Region. They are used for performance, not for disaster recovery. They require automated backups to be enabled. You can only create read replicas of databases running on RDS. You cannot create an RDS Read Replica of a database that is running on Amazon EC2.

- Multi-AZ: when a Multi-AZ DB instance is provisioned, RDS automatically creates a primary DB Instance and synchronously replicates the data to a standby instance in a different Availability Zone (AZ). RDS will automatically failover to the standby copy.

- It can use Auto scaling to add replicas

- Serverless option: Aurora Serverless

- You can't use SSH to access instances.

- It is suited for OLTP workloads (real-time)

- Security through IAM, Security Groups, KMS, SSL in transit

- Support for IAM Authentication (IAM roles)

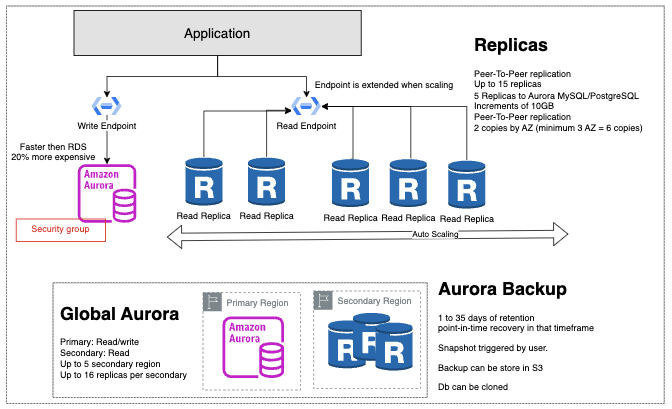

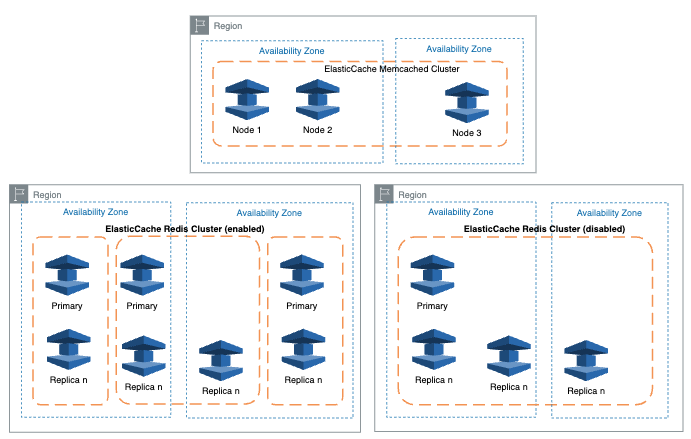

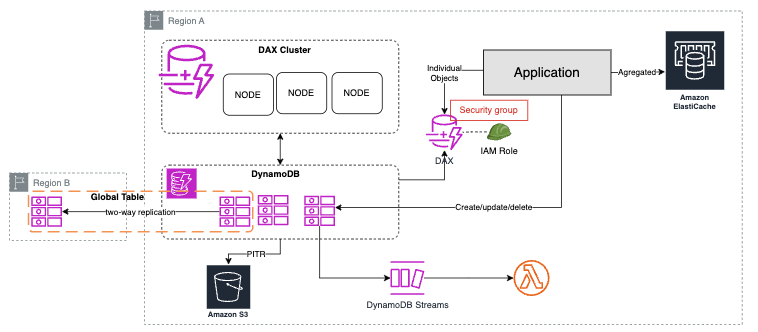

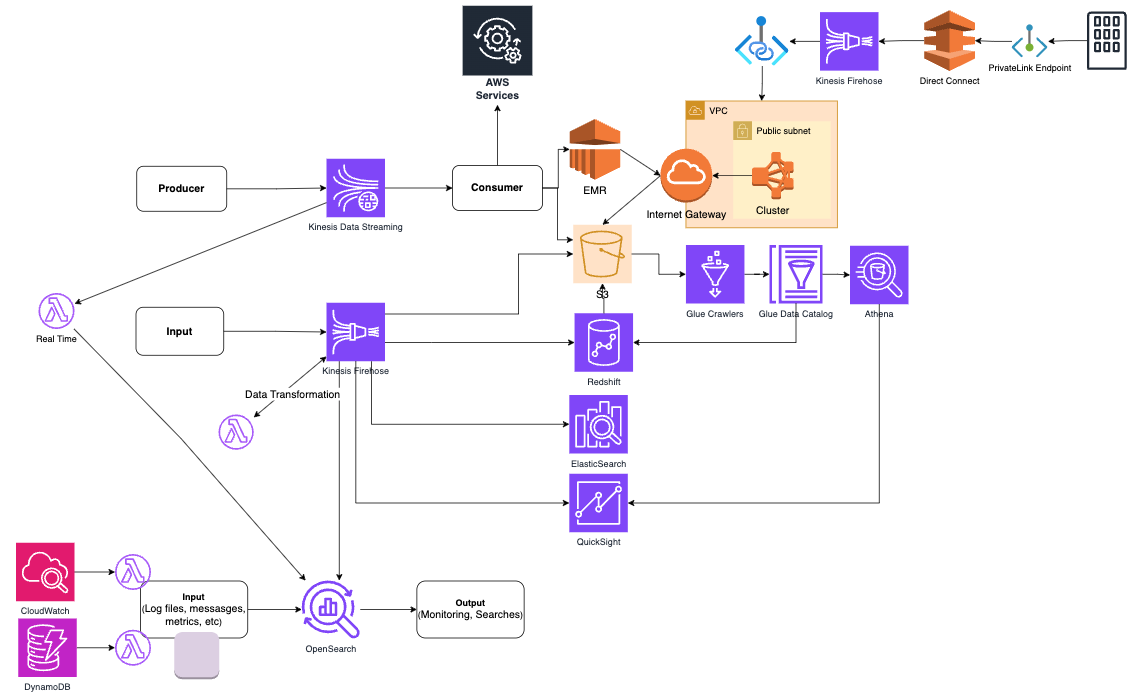

- RDS Proxy: allows apps to pool and share DB connections, improving database efficiency and reducing failover time. It makes applications more scalable, more resilient to database failures, and more secure
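The way read replicas improve scalability can be sketched from the application side: writes go to the primary endpoint, and reads are spread across the replica endpoints. This is a simplified illustration, not an AWS library; the class `ReadWriteRouter` and the endpoint names are hypothetical, and a real application also has to tolerate replica lag because replication to read replicas is asynchronous.

```python
from itertools import cycle


class ReadWriteRouter:
    """Send SELECT statements to replicas (round-robin), everything else to the primary."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = cycle(replicas) if replicas else None

    def endpoint_for(self, sql):
        """Pick the endpoint that should run this statement."""
        is_read = sql.lstrip().lower().startswith("select")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


# Hypothetical endpoint names, used only for illustration.
router = ReadWriteRouter(
    primary="mydb.example-primary.rds.amazonaws.com",
    replicas=[
        "mydb-replica-1.example.rds.amazonaws.com",
        "mydb-replica-2.example.rds.amazonaws.com",
    ],
)
write_target = router.endpoint_for("INSERT INTO orders VALUES (1)")
read_target = router.endpoint_for("SELECT * FROM orders")
```

With no replicas configured, every statement falls back to the primary, which mirrors a single-instance setup.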